Joke's on you I can't afford an HDR display & also I'm colorblind.

You can still profit from the increase in brightness and contrast! Doesn’t make a good HDR screen any cheaper though…

HDR? Ah, you mean when videos keep flickering on Wayland!

I will switch when I need a new GPU.

Now that explicit sync has been merged this will be a thing of the past

And it was never a thing on AMD GPUs.

videos? everything flickers for me on wayland. X.org is literally the only thing keeping me from switching back to windows right now.

Wayland has started to support Explicit Sync which can fix the behavior of Nvidia's dumpster fire of a driver

You want to win me over? For starters, provide a layer that supports all hooks and features in xdotool and wmctrl. As I understand it, that's nowhere near present, and maybe even deliberately impossible "for security reasons".

I know about ydotool and dotool. They're something but definitely not drop-in replacements.

Unfortunately, I suspect I'll end up being forced onto Wayland at some point because the easy-use distros will switch to it, and I'll just have to get used to moving and resizing my windows manually with the mouse. Over and over. Because that's secure.
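For anyone wondering what kind of automation is at stake here, this is a minimal sketch of an X11-only snapping helper built on `wmctrl`. The function names and the 1920x1080 screen size are made up for illustration; the only real tool call is `wmctrl -r :ACTIVE: -e g,x,y,w,h`, which moves and resizes the active window.

```bash
# Hypothetical helper: build a wmctrl move/resize argument of the form
# "gravity,x,y,width,height" that covers the left half of a screen.
left_half_geometry() {
  screen_w=$1
  screen_h=$2
  echo "0,0,0,$(( screen_w / 2 )),${screen_h}"
}

# Snap the active window to the left half of the screen.
# X11 only, requires wmctrl; defined here but not called.
snap_active_left() {
  wmctrl -r :ACTIVE: -e "$(left_half_geometry "$1" "$2")"
}

# Print the geometry string without touching any window.
left_half_geometry 1920 1080
```

Binding `snap_active_left 1920 1080` to a hotkey is the sort of thing that has no drop-in equivalent on Wayland unless the compositor exposes it.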

I think the Wayland transition will not be without compromises

May I ask why you don't use tiling window managers if you don't like to move windows with the mouse?

> Unfortunately, I suspect I’ll end up being forced onto Wayland at some point because the easy-use distros will switch to it, and I’ll just have to get used to moving and resizing my windows manually with the mouse. Over and over. Because that’s secure.

I think you were being sarcastic but it is more secure. Less convenient though.

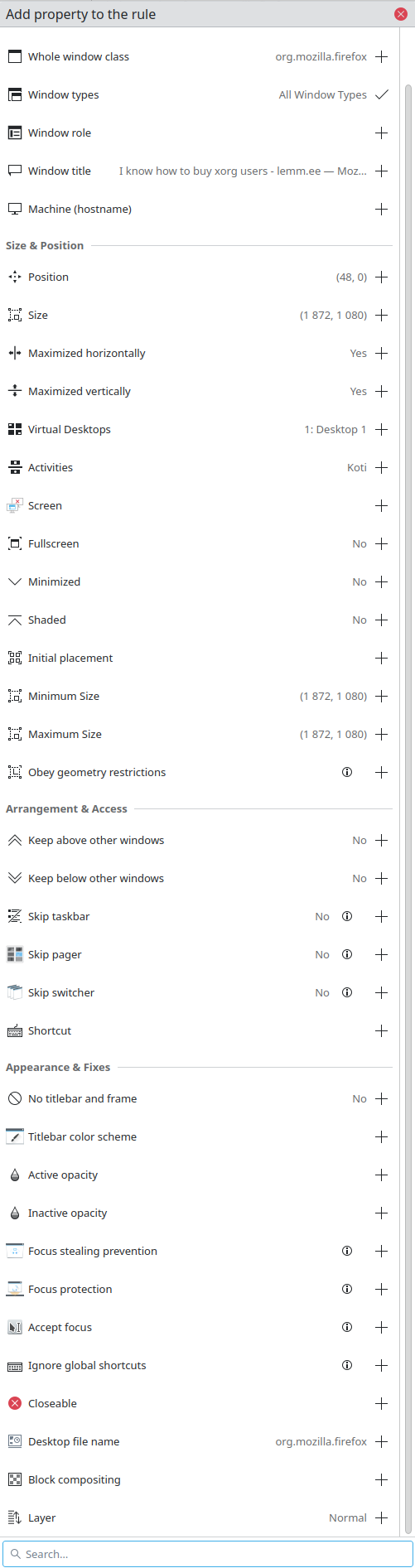

I'm not sure if that's what you're looking for but KDE has nice window rules that can affect all sorts of settings. Placement, size, appearance etc. Lot of options. And you can match them per specific windows or the whole application etc. I use it for few things, mostly to place windows on certain screens and in certain sizes.

I think it's possible to make such a tool for Wayland, but in Wayland stuff like that is entirely up to the compositor

So, ask the compositor developers to expose the required shit and you can make such a tool

hahahaha tell that to nvidia users

Smart Nvidia users are ex Nvidia users

Actually, wait until the next DE releases hit the repos; all the Nvidia problems just got solved

I'm a happy Nvidia user on Wayland. Xorg had a massive bug that forced me to try out Wayland, and it has been really nice and smooth. I was surprised, seeing all the comments. But I might've just gotten lucky.

How will HDR affect the black screen I get every time I've tried to launch KDE under Wayland?

It will make your screen blacker.

But in all seriousness, your display manager might not support Wayland. Try something like SDDM.

I'm using SDDM and it lets me select Wayland as an option just fine, Just my Nvidia card won't load my desktop then.

I'm going full AMD next time I upgrade but that's gonna be a while away...

Nothing you can do then. Stay on x11 until nvidia get their shit together.

It should work with the latest Nvidia driver. It does for me.

OK but can you please call NVidiachan? I know you two don't get along but maybe you can ask her for some support?

NVidiachan is busy selling GPUS for AI, but she is also working on adding explicit sync

I'm not touching Wayland until it has feature parity with X and gets rid of all the weird bugs like cursor size randomly changing and my jelly windows being blurry as hell until they are done animating

Not sure why you're getting downvoted. I wish I could switch, but only X works reliably.

It's not ready yet.

The protocol for apps/games to make use of it is not yet finalized.

The protocol won't be "finalized" for a long time as new shit is proposed every day.

But, for me and many others, it has had enough protocols to work properly for some years now. Right now I'm using Wayland exclusively with some heavy workloads and 0 issues.

Me, not much of a gamer and not a movie buff and having no issues with the way monitors have been displaying things for the past 25 years: No.

When I could no longer see the migraine-inducing flicker while being irradiated by a particle accelerator shooting a phosphor coated screen in front of my face, I was good to go.

It was exciting when we went from green/amber to color!

Been watching this drama about HDR for a year now, and still can't be arsed to read up on what it is.

HDR makes stuff look really awesome. It's super good for real.

HDR or High Dynamic Range is a way for images/videos/games to take advantage of the increased colour space, brightness and contrast of modern displays. That is, if your medium, your player device/software and your display are HDR capable.

HDR content is usually mastered with a peak brightness of 1000 nits or more in mind, while Standard Dynamic Range (SDR) content is mastered for 80-100 nit screens.

How is this a software problem? Why can't the display server just tell the monitor "make this pixel as bright as you can (255) and this other pixel as dark as you can (0)"?

In short: Because HDR needs additional metadata to work. You can watch HDR content on SDR screens and it’s horribly washed out. It looks a bit like log footage. The HDR metadata then tells the screen how bright/dark the image actually needs to be. The software issue is the correct support for said metadata.

I’d speculate (I’m not an expert) that the reason is that it enables more granularity. Even the 1024 brightness steps that 10-bit colour can produce are nothing compared to the millions-to-one contrast of modern LCDs or the near-infinite contrast of OLED. Besides, screens come in a range of peak brightnesses. I suppose doing it this way lets the manufacturer interpret the metadata so the content looks best on their screens.

And also, with your solution, a brightness value of 1023 would always be the max brightness of the TV. You don’t always want that, if your TV can literally flashbang you. Sure, you want the sun to be peak brightness, but not every white object is as bright as the sun… That’s the true beauty of a good HDR experience. It looks fairly normal but reflections of the sun or the fire in a dark room just hit differently, when the rest of the scene stays much darker yet is still clearly visible.
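To make the "1023 is not simply max brightness" point concrete, here is a rough sketch assuming the content uses the PQ transfer function (SMPTE ST 2084, the curve HDR10 is built on). The thread doesn't name a transfer function, so that choice is an assumption, as are the function names, the full-range 0..1023 normalisation, and the 600-nit example panel. The clipping step is only a naive stand-in for real tone mapping.

```bash
# Decode a 10-bit PQ code value into absolute luminance in nits.
# Assumption: full-range codes 0..1023 (real video often uses a narrower range).
pq_to_nits() {
  awk -v code="$1" 'BEGIN {
    # Constants from the PQ (ST 2084) definition.
    m1 = 2610 / 16384; m2 = 2523 / 4096 * 128
    c1 = 3424 / 4096;  c2 = 2413 / 4096 * 32; c3 = 2392 / 4096 * 32
    e = code / 1023              # normalise the code value to 0..1
    p = e ^ (1 / m2)
    num = p - c1; if (num < 0) num = 0
    printf "%.0f\n", 10000 * (num / (c2 - c3 * p)) ^ (1 / m1)
  }'
}

# Naive stand-in for tone mapping: clip to what the panel can show.
clip_to_peak() {
  if [ "$1" -gt "$2" ]; then echo "$2"; else echo "$1"; fi
}

# Show what a hypothetical 600-nit panel would do with a few code values.
for code in 0 512 769 1023; do
  nits=$(pq_to_nits "$code")
  echo "code $code -> $nits nits mastered, $(clip_to_peak "$nits" 600) nits shown"
done
```

The takeaway is that the code value describes an absolute brightness the content was mastered for, and the display (or compositor) has to decide how to fit that into what the panel can physically do.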

Unless it comes without any interruption to gaming compatibility, I definitely don't want to switch, sorry.

HDR is cool and I look forward to getting that full game compatibility and eventually making the switch, but it's just not there yet

HDR is almost useless to me. I'll switch when wayland has proper remote desktop support (lmk if it does but I'm pretty sure it does not)

Seems like there's a bunch of solutions out there:

> As of 2020, there are several projects that use these methods to provide GUI access to remote computers. The compositor Weston provides an RDP backend. GNOME has a remote desktop server that supports VNC. WayVNC is a VNC server that works with compositors, like Sway, based on the wlroots library. Waypipe works with all Wayland compositors and offers almost-transparent application forwarding, like `ssh -X`.

Do these not work for your use case?

KDE on Wayland doesn't even have sticky keys.

Does wine run on wayland?

Edit, had to look up wth HDR is. Seems like a marketing gimmick.

Anti Commercial AI thingy

CC BY-NC-SA 4.0

Inserted with a keystroke running this script on linux with X11

```bash
#!/usr/bin/env nix-shell
#!nix-shell -i bash --packages xautomation xclip

sleep 0.2

(echo '::: spoiler Anti Commercial AI thingy
[CC BY-NC-SA 4.0](https://creativecommons.org/licenses/by-nc-sa/4.0/)

Inserted with a keystroke running this script on linux with X11
```bash'
cat "$0"
echo '```
:::') | xclip -selection clipboard

xte "keydown Control_L" "key V" "keyup Control_L"
```

It isn't, it's just that marketing is really bad at displaying what HDR is about.

HDR means each color channel that used 8 bits can now use 10 bits, sometimes more. That means an increase from 256 shades per channel to 1024, allowing a wider range of shades to be displayed in the same picture and avoiding the color banding problem.
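A quick back-of-the-envelope sketch of the banding argument. The function names and the 3840-pixel-wide gradient are illustrative assumptions, and the band width is rounded down by integer division.

```bash
# Number of distinct shades per channel at a given bit depth.
levels() {
  echo $(( 1 << $1 ))
}

# Approximate width in pixels of each flat band when a smooth
# gradient is stretched across a given number of pixels.
band_width() {
  echo $(( $2 / $(levels "$1") ))
}

for bits in 8 10; do
  echo "$bits-bit: $(levels "$bits") shades, bands about $(band_width "$bits" 3840) px wide on a 3840 px gradient"
done
```

Wider bands are easier to spot as visible steps, which is why a slow sky gradient tends to show banding at 8 bits and much less at 10.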

That’s just 10-bit color, which is a thing and does reduce banding but is only a part of the various HDR specs. HDR also includes a significantly increased color space, as well as varying methods (depending on what spec you’re using) of mapping the mastered video to the capabilities of the end user’s display. In addition, to reach the required dynamic range, HDR displays need some level of local dimming, which increases contrast by adjusting the brightness of different parts of the image, either through backlight zones in LCD technologies or per pixel in self-emissive technologies like OLED.

Thank you.

I assume HDR has to be explicitly encoded into images (and moving images) then to have true HDR, otherwise it's just upsampled? If that's the case, I'm also assuming most media out there is not encoded with HDR, and further if that's correct, does it really make a difference? I'm assuming upsampling means inferring new values and probably using gaussian, dithering, or some other method.

Somewhat related, my current screens support 4k, but when watching a 4k video at 60fps side by side on a screen at 4k resolution and another 1080p resolution, no difference could be seen. It wouldn't surprise me if that were the same with HDR, but I might be wrong.

yes, from the capture (camera) all the way to distribution the content has to preserve the HDR bit depth. Some content on YouTube is in HDR (that is noted in the quality settings along with 1080p, etc), but the option only shows if both the content is HDR and the device playing it has HDR capabilities.

Regarding streaming, there is already a lot of HDR content out there, especially newer shows. But stupid DRM has always pushed us to alternative sources when it comes to playback quality on Linux anyway.

> no difference could be seen

If you're not seeing a difference between 4K and 1080p though, even up close, maybe your media isn't really 4K. I find the difference to be quite noticeable.
