Welcome to another episode! The BBC joins the fediverse, and content moderation remains the most important conversation in the fediverse. My unscientific vibe-o-meter also picks up more discussions around content moderation and the

The BBC has launched their own Mastodon server this week, announcing their presence in an extensive blog post. It is a private server, only intended for accounts from the BBC, such as Radio 4 and 5 Live. The R&D department of the BBC established the server as an experimental project that will run for six months. After that, the BBC will evaluate whether and how to continue.

In the blog post, the BBC talks about the challenges they ran into while setting up a presence on the fediverse. They note that the decentralised, federated model is hard to explain to people who are mostly familiar with centralised ownership models, and that this raises questions about hosting user content. Moderation is also somewhat of an open question, as it relies on trusting that third-party servers will moderate their own users properly. The BBC comes from a model where they are responsible for comments (on their own website, for example) and have all the tools necessary to moderate comments that do not meet their guidelines. On the fediverse, they depend on other servers’ moderation to take action when required.

The BBC’s entrance into the fediverse comes at a time when news organisations are actively exploring how to move forward with social media. The situation in Canada is the most notable example: as a result of the Online News Act, Google and Meta will have to pay Canadian news organisations for posts on their platforms that link to those organisations’ sites. Meta has threatened for a while that the passing of this bill would lead them to ban news altogether, and this week they actually banned all links to news organisations (both Canadian and international) for all Canadian users. News organisations setting up their own social media servers on the fediverse seems like a possible way out of this impasse, but for now, nothing has been said about this.

Meanwhile, over at Meta, employees at Threads seem to be acutely aware of the BBC launching its Mastodon server. In response to the BBC news, a Threads engineer stated: “we’ve been following this news internally with excitement. no updates on our side to share yet”. Threads has consistently stated its intent to add ActivityPub support. It has also stated multiple times that it is not interested in hosting news and political content. News organisations posting their own content on their self-hosted fediverse servers thus fits right in with Meta’s thinking. I wrote about this earlier as well, and Threads employees being excited about this scenario playing out points further in this direction as a reason why Meta intends to add ActivityPub support.

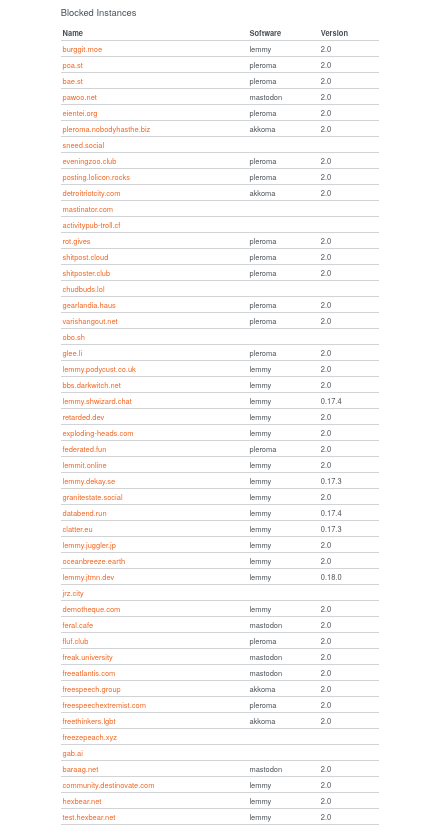

Another direction the conversation around the BBC joining the fediverse took was transphobia and server blocking. Many trans people feel uncomfortable with the BBC platforming explicit transphobia, and some servers decided to block the BBC Mastodon server in response. This prompted some interesting and constructive discussions about the extent to which server admins should block servers. On a basic level, freedom of association is one of the core principles of a decentralised social network, so people being free to block whichever servers they prefer is the system working as intended. However, asking critical questions about whether doing so meaningfully contributes to the safety of your users is also a valid way of holding people accountable for the actions they take on behalf of others. If this interests you, I personally found these two exchanges valuable to read; in both cases, the value is in the comments where people voice their differences.

In last week’s update I wrote about the Stanford report on CSAM on Mastodon, giving an overview of the situation and promising to keep track of how the fediverse responds. WeDistribute also published an extensive article about the findings that is worth reading. It zooms in on the recommendations and places them in the larger context of what is at stake for internet regulation.

The W3C Social Web Incubator Community Group held a special topic call this week on the Social Web and CSAM, where the Stanford report was discussed in depth. David Thiel and Alex Stamos of the Stanford Internet Observatory were also present. Meeting notes and an audio recording are available here. Some of my notes and takeaways:

Alex Stamos distinguishes between three different problems: (1) finding, taking down and reporting CSAM that is already known, where the material can be matched against existing hash databases with tools such as PhotoDNA; (2) the same, but for material that is new or computer-generated; (3) situations where the social media accounts of child victims are actively involved in the creation of material.

For the first problem, infrastructure already exists that institutions can use to automate the scanning, reporting and deletion of CSAM. However, it is aimed at large organisations and is not built to handle a federated structure. The second problem is something that centralised social networks struggle with as well. The third problem is not really present on the fediverse currently, as it is largely adults who use the fediverse, and it currently happens mainly on Instagram. This might change, however, if the fediverse grows and different audiences join. For now, Alex Stamos recommends focusing on the first problem: how to integrate a centralised scanning service into a federated architecture.

Another question that came up was how effective adding a standard scanning tool would be. Here Alex Stamos is clear, stating that scanning for perceptual hashes is an effective way of greatly reducing people’s ability to trade CSAM.
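To make “scanning for perceptual hashes” a bit more concrete: PhotoDNA’s algorithm is proprietary, so the sketch below swaps in a much simpler, publicly known perceptual hash (the “average hash”) purely to illustrate the general idea. Visually similar images produce hashes that differ in only a few bits, so a server can compare an upload against a list of known hashes using a Hamming-distance threshold instead of requiring an exact file match. The function names and the default threshold of 5 bits are hypothetical illustrations, not part of any real scanning service.

```python
from typing import Iterable, List

# A grayscale image as rows of pixel intensities (0-255).
Grayscale = List[List[int]]


def average_hash(pixels: Grayscale, size: int = 8) -> int:
    """Average hash (aHash), a simple stand-in for proprietary hashes like PhotoDNA.

    Shrinks the image to size x size cells by block averaging, then emits one
    bit per cell: 1 if the cell is brighter than the mean of all cells.
    """
    height, width = len(pixels), len(pixels[0])
    if height < size or width < size:
        raise ValueError("image must be at least size x size pixels")
    cells = []
    for by in range(size):
        for bx in range(size):
            y0, y1 = by * height // size, (by + 1) * height // size
            x0, x1 = bx * width // size, (bx + 1) * width // size
            block = [pixels[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of bits that differ between two hashes."""
    return bin(a ^ b).count("1")


def matches_known(image_hash: int, known_hashes: Iterable[int], threshold: int = 5) -> bool:
    """Hypothetical check: True if the hash is within `threshold` bits of any known hash."""
    return any(hamming(image_hash, known) <= threshold for known in known_hashes)


if __name__ == "__main__":
    gradient = [[x * 10 for x in range(16)] for _ in range(16)]
    brighter = [[p + 10 for p in row] for row in gradient]  # same picture, slightly edited
    inverted = [[255 - p for p in row] for row in gradient]  # visually different picture
    known = {average_hash(gradient)}
    print(matches_known(average_hash(brighter), known))  # True
    print(matches_known(average_hash(inverted), known))  # False
```

The brightened copy is a different file but hashes identically, which is why perceptual hashing catches re-encoded or lightly edited copies that exact checksums would miss. Real deployments use far more robust hashes than this one.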

Regarding the reporting of CSAM, two problems are noted. First, there is a lack of reporting to NCMEC. US fediverse servers are required by law to file a report with NCMEC every time they take down CSAM, and it is unclear whether this legal procedure is being followed. At the least, there is a lack of awareness and education among server operators about this. Second, there is a lack of moderation infrastructure, both for automated reporting and for ways to protect moderators from exposure to CSAM and violent content. An example of the latter would be showing media in black and white and blurred when automated scanning suspects it is an extremely violent video.

The work of IFTAS remains highly interesting to me, in this case its work on providing a centralised intermediary service that gives the thousands of server operators access to automated CSAM scanning tools.

In other news

Software and other technical news

Artemis, the first Kbin app for Android and iOS, has launched in public beta.

Automadon is a new iOS app that lets you create custom shortcuts for your Mastodon account.

Two new ways to bring the fediverse to your Apple Watch: Stomp lets you see your Mastodon timeline (via TechCrunch), and Voyager reports having an app in TestFlight for checking your Lemmy account on your Apple Watch!

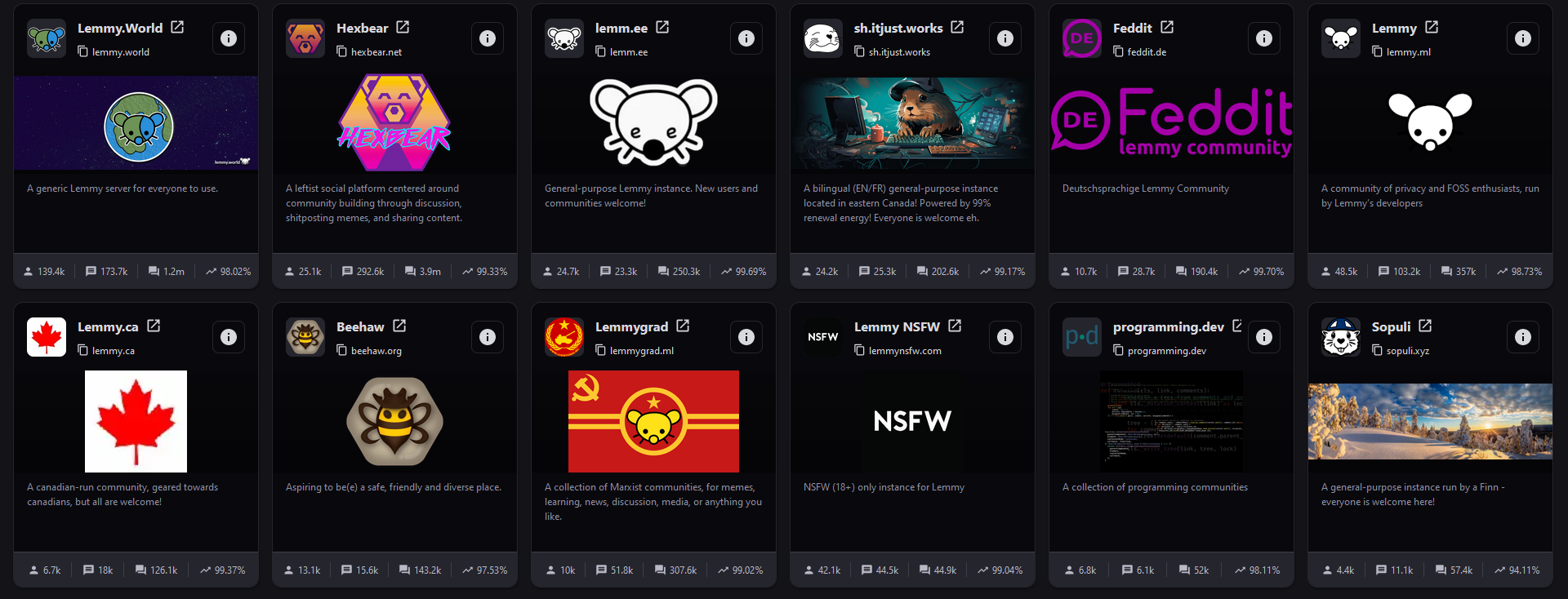

Third-party Reddit app Sync is back, but as a Lemmy app.

Daniel Supernault, the creator of Pixelfed, reports that he has started work on an open source encrypted fediverse instant messenger, based on the Signal protocol.

SpaceHost is a new managed hosting service for the fediverse, which donates a portion of net revenue to the software developers. It is still in early access, and starts with providing Lemmy and Firefish managed hosting.

Cloudflare’s ActivityPub server Wildebeest is no longer being maintained, according to their GitHub.

Community

Nivenly, the cooperative behind the Mastodon server hachyderm.io, is holding a community discussion and vote on how to approach distributed generative AI systems. The blog Nexus of Privacy has an extensive writeup of the discussion and the arguments within the community. A follow-up comment by the author, Jon, gets at why I’m linking to this: community governance efforts are hard, and it’s worth learning how others have approached them.

The Lemmy developers will host an Ask Me Anything on Monday, August 7th, at 15:00 CEST. The thread is already open for posting questions in advance. The fediverse does not have a great mode of communication between developers and users; communication often happens either on GitHub/Codeberg or in random comment sections. Providing a more structured place for people to hear from the developers is a good direction to go in.

What I’ve been reading:

Mastodon’s Mastodon’ts. An essay on “how Mastodon posts work are terrible vectors for abuse, as well as being bad for basic usability.” To me, the inability to remove replies to a post you’ve made is a significant barrier for institutions adopting the fediverse. Harmful and racist replies can stay up if the admin of another server does not act on a report, while a block does not prevent other people from seeing the reply. With the renewed interest of news organisations and governments in setting up a presence on the fediverse, this issue seems likely to become more pressing.
