The worst is in the workplace. When people routinely tell me they looked something up with AI, I now have to assume that I can't trust what they say any longer, because there is a high chance they are just repeating some AI hallucination. It is really a sad state of affairs.

I am way less hostile to GenAI (as a tech) than most, and even I've grown to hate this scenario. I am a subject matter expert on some things, and I've still had people wasting my time making me prove their AI hallucinations wrong.

I've started seeing large AI generated pull requests in my coding job. Of course I have to review them, and the "author" doesn't even warn me it's from an LLM. It's just allowing bad coders to write bad code faster.

I feel the same way. I was talking with my mom about AI the other day, and she was still at "it's not good that AI is trained on stolen images, that it's making people lazy and taking jobs away from people," which is good. But I had to explain to her how much one AI prompt costs in energy and resources; how many people mindlessly make hundreds of prompts a day for largely stupid shit they don't need; how AI hallucinates and is actively used by bad actors to spread mis- and disinformation; and how it is literally being built into search engines everywhere, so even if you want to avoid it as a normal person, you may still end up participating in AI prompting every single fucking time you search for anything on Google. She was horrified.

There definitely are some net positives to AI, but currently the negatives outweigh the positives and most people are not using AI responsibly at all. I have little to no respect for people who use AI to make memes or who use it for stupid everyday shit that they could have figured out themselves.

The most dystopian shit I have seen recently was when my boyfriend and I went to see Weapons at the cinema and got an ad for an AI assistant. The ad is basically this braindead bimbo at a laundromat deciding to have AI tell her how to wash her clothes instead of looking at the fucking care labels on her clothes and putting two and two together. She literally takes a picture of the label, has the AI assistant tell her what to do, and then goes "thank you so much, I could never have done this without you".

I fucking laughed in the cinema. Laughed and turned to my boyfriend and said: this is so fucking dystopian, dude.

I feel insane for seeing so many people just mindlessly walking down this path of utter retardation. Even when you tell them how disastrous it is for the planet, it doesn't compute in their heads because it is not only convenient to have a machine think for you. It's also addictive.

You are not correct about the energy use of prompts. They are not very energy intensive at all. Training the AI, however, is breaking the power grid.

Maybe not an individual prompt, but with how many prompts are made for stupid stuff every day, it will stack up to quite a lot of CO2 in the long run.

Not denying that training AI demands way more energy, but that doesn't really matter: manufacturing the hardware, training the models, and millions of people using AI all add up to the same bleak picture long term.

Considering that environmental protection has only just started to be taken seriously, and now here they come and dump this newest bomb on humanity, it is absolutely devastating that AI has been allowed to run rampant everywhere.

According to this article, 500,000 AI prompts amount to the same CO2 output as a round-trip flight from London to New York.

I don't know how many times a day 500,000 AI prompts are reached, but I'm sure it is more than twice or even thrice. As time moves on it will be much more than that. It will probably outdo the number of actual flights between London and New York in a day. Every day. It will probably also catch up to whatever energy it took to train the AI in the first place, and surpass it.

Because you know. People need their memes and fake movies and AI therapist chats and meal suggestions and history lessons and a couple of iterations on that book report they can't be fucked to write. One person can easily end up prompting hundreds of times in a day without even thinking about it. And if everybody starts using AI to think for them at work and at home, it'll end up being many, many, many flights back and forth between London and New York every day.

I had this discussion about generated CO2 here a while ago, and with the numbers my discussion partner gave me, the calculation said that the yearly usage of ChatGPT is approximately 0.0017% of our CO2 reduction during the COVID lockdowns. Chatbots are not what is killing the climate. What IS killing the climate has not changed since the green movement started: cars, planes, construction (mainly concrete production) and meat.

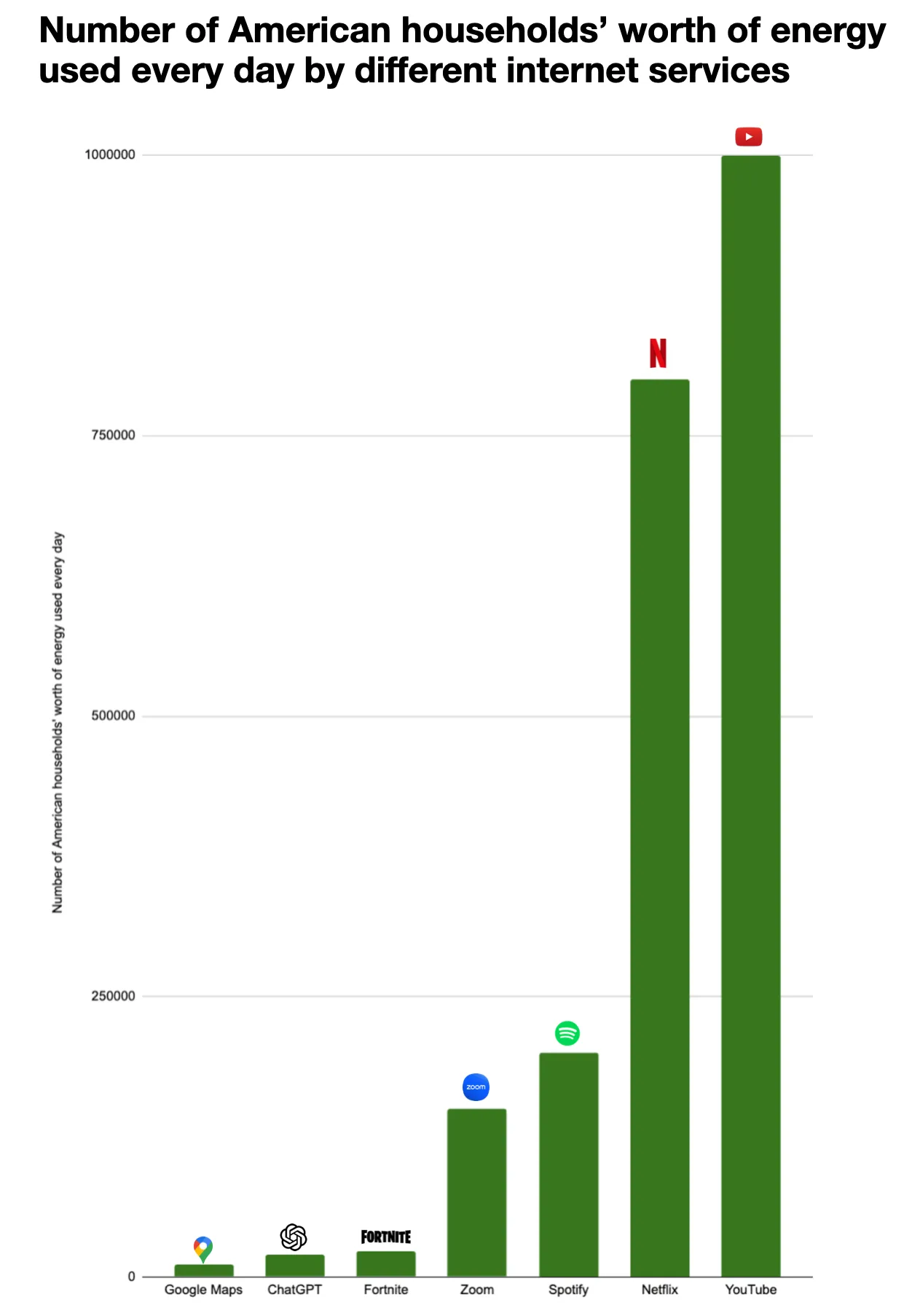

The exact energy costs are not published, but 3 Wh/request for ChatGPT-4 is the upper limit from what we know (and that's in line with the approximate power consumption of my graphics card when running an LLM). Since Google uses it for every search, they have probably optimized for their use case, and some sources cite 0.3 Wh/request for chatbots; it depends on which model you use. Training is a one-time cost, and for ChatGPT-4 it raises the maximum cost per request to 4 Wh. That's nothing. The combined worldwide energy usage of ChatGPT is equivalent to about 20k American households. This is for one of the most downloaded apps on iPhone and Android. Set against that massive usage, it's clear that cutting back here is not effective for anyone interested in reducing climate impact, or you'd have to start scolding everyone who runs their microwave 10 seconds too long.

Even compared to other online activities that use data centers, ChatGPT's power usage is small change. If you use ChatGPT instead of watching Netflix, you actually save energy!

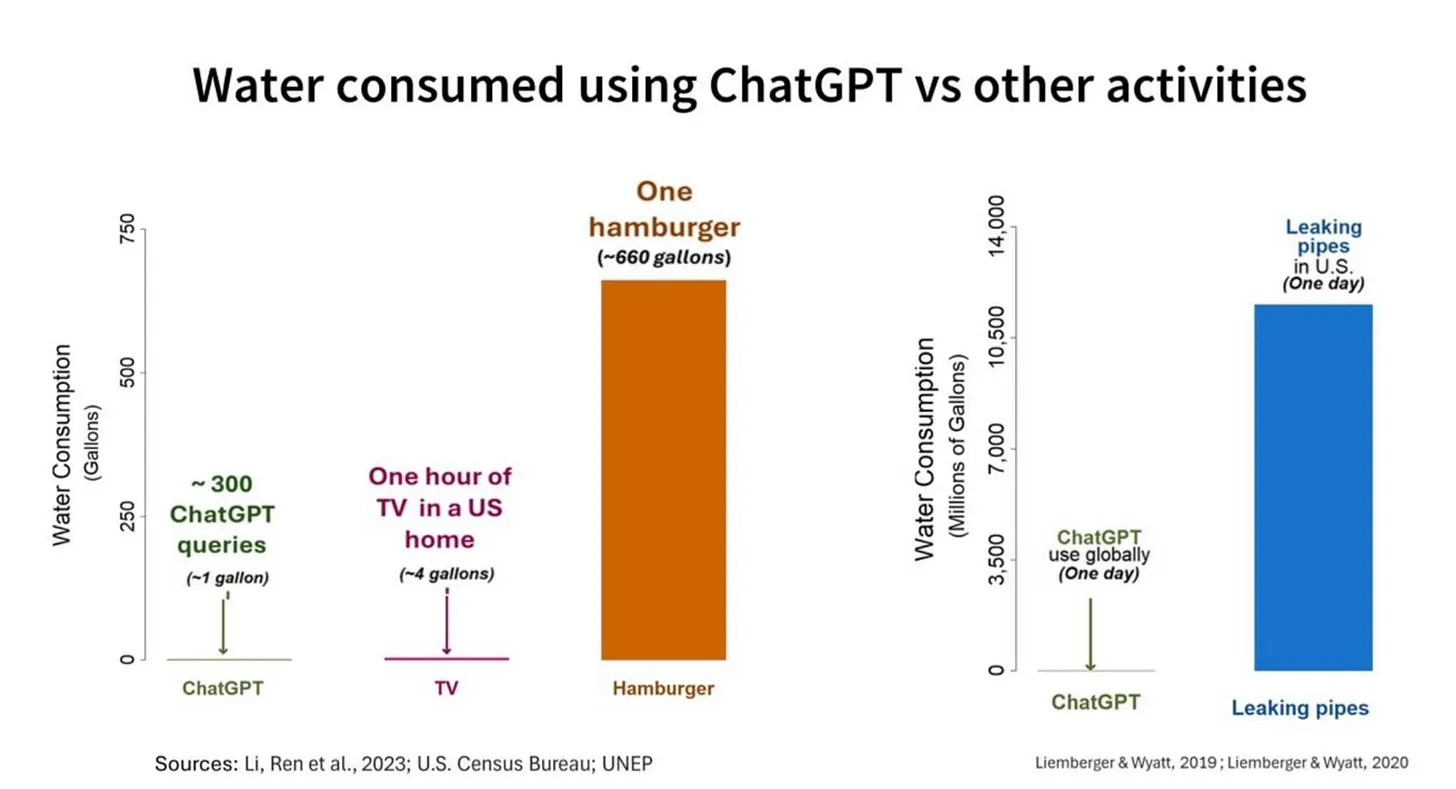

Water is about the same, although the placement of data centers in the US sucks. The used water doesn't disappear, though; it's mostly returned to the rivers or evaporated. Water usage in the US is 58,000,000,000,000 gallons (220 trillion liters) per year. A ChatGPT request uses between 10 and 25 ml of water for cooling. A hamburger uses about 600 gallons of water. 2 trillion liters are lost due to aging infrastructure. If you want to reduce water usage, go vegan or fix water pipes.

Read up here!

It's important to remember that there's a lot of money being put into A.I. and therefore a lot of propaganda about it.

This happened with a lot of shitty new tech, and A.I. is one of the biggest examples of this I've known about.

All I can write is that, if you know what kind of tech you want and it's satisfactory, just stick to that. That's what I do.

Don't let ads get to you.

First post on a lemmy server, by the way. Hello!

There was a quote about how Silicon Valley isn't a fortune teller betting on the future. It's a group of rich assholes that have decided what the future would look like and are pushing technology that will make that future a reality.

Welcome to Lemmy!

Classic Torment Nexus moment over and over again really

Reminds me of the way NFTs were pushed. I don’t think any regular person cared about them or used them, it was just astroturfed to fuck.

My boss had GPT make this informational poster thing for work. It's supposed to explain stuff to customers, and it's rife with spelling errors and garbled text. I pointed it out to my boss and she said it was good enough for people to read. My eye twitches every time I see it.

good enough for people to read

wow, what a standard, super professional look for your customers!

I'm mostly annoyed that I have to keep explaining to people that 95% of what they hear about AI is marketing. In the years since we bet the whole US economy on AI and were told it's absolutely the future of all things, it has yet to produce a really great work of fiction (as far as we know), a groundbreaking piece of software of its own production or design, or a blockbuster product that I'm aware of.

We're betting our whole future on a concept of a product that has yet to reliably profit any of its users or the public as a whole.

I've made several good faith efforts at getting it to produce something valuable or helpful to me. I've done the legwork on making sure I know how to ask it for what I want, and how I can better communicate with it.

But AI "art" requires an actual artist to clean it up. AI fiction requires a writer to steer it or fix it. AI non-fiction requires a fact checker. AI code requires a coder. At what point does the public catch on that the emperor has no clothes?

it's yet to produce a really great work of fiction (as far as we know), a groundbreaking piece of software of its own production or design, or a blockbuster product

Or a profit. Or hell even one of those things that didn’t suck! It’s critically flawed and has been defying gravity on the coke-fueled dreams of silicon VC this whole time.

And still. One of next year’s fiscal goals is “AI”. That’s all. Just “AI”.

It’s a goal. Somehow. It’s utter insanity.

The goal is "[Replace your money-needing meatsacks with] AI" but the suits don't want to say it that clearly.

Anyone in engineering knows that the first 90% of your goal is the easy bit. You'll then spend 90% of your time on the remainder. Same for AI and getting past the uncanny valley with art.

There's a monster in the forest, and it speaks with a thousand voices. It will answer any question, and offer insight to any idea. It knows no right or wrong. It knows not truth from lie, but speaks them both the same. It offers its services freely, many find great value. But those who know the forest well will tell you that freely offered does not mean free of cost. For now the monster speaks with a thousand and one voices, and when you see the monster it wears your face.

Not just you. AI is making people dumber. I am frequently correcting the mistakes of colleagues who use it.

My pet peeve: "here's what ChatGPT said..."

No.

Stop.

If I'd wanted to know what the Large Lying Machine said, I would've asked it.

It's like offering unsolicited advice, but it's not even your own advice

People are overworked, underpaid, and struggling to make rent in this economy while juggling 3 jobs or taking care of their kids, or both.

They are at the limits of their mental load, especially women, who shoulder it disproportionately in many households. AI is used to drastically reduce that mental load. People suffering from burnout use it for unlicensed therapy. I'm not advocating for it; I'm pointing out why people use it.

Treating AI users like a moral failure and disregarding their circumstances does nothing to discourage the use of AI. All you are doing is alienating them further from anti-AI sentiment.

First, understand the person behind it. Address the root cause, which is that AI companies are exploiting the vulnerabilities of people with or close to burnout by selling the dream of a lightened workload.

It's like eating factory farmed meat. If you have eaten it recently, you know what horrors go into making it. Yet, you are exhausted from a long day of work and you just need a bite of that chicken to take the edge off to remain sane after all these years. There is a system at work here, greater than just you and the chicken. It's the industry as a whole exploiting consumer habits. AI users are no different.

No, it's not just you or unsat-and-strange. You're pro-human.

Trying something new when it first comes out or when you first get access to it is novelty. What we've moved to now is mass adoption. And that's a problem.

These LLMs are automation of mass theft with a good enough regurgitation of the stolen data. This is unethical for the vast majority of business applications. And good enough is insufficient in most cases, like software.

I had a lot of fun playing around with AI when it first came out. And people figured out how to do prompts I can't seem to replicate. I don't begrudge people for trying a new thing.

But if we aren't going to regulate AI or teach people how to avoid AI-induced psychosis, then even in applications where it could be useful, it's a danger to anyone who uses it. Not to mention how wasteful its water and energy usage is.

Regulate? That is what the leading AI companies are pushing for: they could get through the bureaucracy, but their competitors couldn't.

The shit just needs to be forced to be open source. If you steal content from the entire world to build a thinking machine, give back to the world.

This would also crash the bubble and would slow down any of the most unethical for-profits.

Unfortunately the masses will do as they're told. Our society has been trained to do this. Even those that resist are playing their part.

On the contrary: society has repeatedly rejected a lot of ideas that industries have come up with.

HD DVD, 3D TV, cryptocurrency, NFTs, LaserDiscs, 8-track tapes, UMDs. A decade ago everyone was hyping up how VR would be the future of gaming, yet it's still a niche novelty today.

The difference with AI is that I don't think I've ever seen a supply-side push this strong before. I'm not seeing a whole lot of demand for it from individual people. It's "oh, this is a neat little feature I can use," not "this technology is going to change my life" the way the laundry machine, the personal motor vehicle, the telephone, or the internet did. I could be wrong, but I think that as long as we can survive the bubble bursting, we will come out the other side with LLMs being a blip on the radar. And one consequence will be that if anyone makes a real AI, they will need to call it something else for marketing purposes, because "AI" will be ruined.

AI's biggest business is (if not already, it will be) surveillance systems sold to authoritarian governments worldwide. Israel is using it in Gaza. It's both used internally and exported as a product by China. Not just cameras on street corners doing facial recognition, but monitoring the websites you visit, the things you buy, the people you talk to. AI will be used on large datasets like these to label people as dissidents, to disempower them financially, and to isolate them socially. And if the AI hallucinates in this endeavor, that's fine. Better to imprison 10 innocent men than to let 1 rebel go free.

In the meantime, AI is being laundered to the individual consumer as a harmless if ineffective toy. "Make me a portrait, give me some advice, summarize a meeting," all things it can do if you accept some amount of errors. But given this domain of problems it solves, the average person would never expect that anyone would use it to identify the first people to pack into train cars.

I must be one of the few remaining people who have never, and will never, type a sentence into an AI prompt.

I despise that garbage.

being anti-plastic is making me feel like i'm going insane. "you asked for a coffee to go and i grabbed a disposable cup." studies have proven its making people dumber. "i threw your leftovers in some cling film." its made from fossil fuels and leaves trash everywhere we look. "ill grab a bag at the register." it chokes rivers and beaches and then we act surprised. "ill print a cute label and call it recyclable." its spreading greenwashed nonsense. little arrows on stuff that still ends up in the landfill. "dont worry, it says compostable." only at some industrial facility youll never see. "i was unboxing a package" theres no way to verify where any of this ends up. burned, buried, or floating in the ocean. "the brand says advanced recycling." my work has an entire sustainability team and we still stock pallets of plastic water bottles and shrink wrapped everything. plastic cutlery. plastic wrap. bubble mailers. zip ties. everyone treats it as a novelty. every treats it as a mandatory part of life. am i the only one who sees it? am i paranoid? am i going insane? jesus fucking christ. if i have to hear one more "well at least" "but its convenient" "but you can" im about to lose it. i shouldnt have to jump through hoops to avoid the disposable default. have you no principles? no goddamn spine? am i the weird one here?

#ebb rambles #vent #i think #fuck plastics im so goddamn tired

If plastic was released roughly two years ago you'd have a point.

If you're saying in 50 years we'll all be soaking in this bullshit called gen-AI and thinking it's normal, well - maybe, but that's going to be some bleak-ass shit.

Also you've got plastic in your gonads.

you asked for thoughts about your character backstory and i put it into chat gpt for ideas

If I want ideas from ChatGPT, I could just ask it myself. Usually, if I'm reaching out to ask people's opinions, I want, you know, their opinions. I don't even care if I hear nothing back from them for ages, I just want their input.

No line breaks and capitalization? Can somebody ask AI to format it properly, please?

Every time someone talks up AI, I point out that you need to be a subject matter expert in the topic to trust it, because it frequently produces really, really convincing summaries that are complete and utter bullshit.

And people agree with me implicitly and tell me they've seen the same. But then they don't hesitate to turn to AI for "quick answers" on subjects they aren't experts in. These are not stupid people, either. I just don't understand.

Meanwhile, every company finds out the week after it lays off everyone that the billions it poured into its shitty "AI" to replace them might as well have been put in bags and set on fire.

The reason AI is wrong so often is because it's not programmed to give you the right answer. It's programmed to give you the most pervasive one.

LLMs are being fed by Reddit and other forums that are ostensibly about humans giving other humans answers to questions.

But have you been on those forums? It's a dozen different answers for every question. The reality is that we average humans don't know shit and we're just basing our answers on our own experiences. We aren't experts. We're not necessarily dumb, but unless we've studied, our knowledge is entirely anecdotal, and we all go into forums to help others with a similar problem by sharing our answer to it.

So the LLM takes all of that data and in essence thinks that the most popular, most mentioned, most upvoted answer to any given question must be the de facto correct one. It literally has no other way to judge; it's not smart enough to cross reference itself or look up sources.

It literally has no other way to judge

It literally does NOT judge. It cannot reason. It does not know what "words" are. It is an enormous rainbow table of sentence probability that does nothing useful except fool people and provide cover for capitalists to extract more profit.

But apparently, according to some on here, "that's the way it is, get used to it." FUCK no.

Billionaires: invests heavily in water.

Billionaires: "In the future there's going to be water wars. You need to invest NOW! Quick before it's too late. I swear I'm not just trying to pump the stock."

Billionaires: "Water isn't accruing value fast enough. Let's invent a product that uses a shit ton of it!"

Billionaires: "No one likes or is using the product. Force them to. Include it in literally all software and every website. Make it so they're using the product even when they don't know they're using it. Include it in every web search. I want that water gone by the end of this quarter!"

The Luddites were right. Maybe we can learn a thing or two from them...

We have a lot of suboptimal aspects of our society, like animal farming, war, religion etc., and yet this is what breaks this person's brain? It's a bit weird.

I'm genuinely sympathetic to this feeling, but AI fears are so overblown and seem to be purely American internet hysteria. We'll absolutely manage this technology, especially now that it appears LLMs are fundamentally limited and will never achieve any form of AGI, and even agentic workflows are years away.

Some people are really overreacting and everyone's just enabling them.

I'm putting a presentation on at work about the downsides of AI next month, please feed me. Together, we can stop the madness and pop this goddamn bubble.

My hope is that the ai bubble/trend might have a silver lining overall.

I’m hoping that people start realizing that it is often confidently incorrect. That while it makes some tasks faster, a person will still need to vet the answers.

Here’s the stretch. My hope is that by questioning and researching to verify the answers ai is giving them, people start applying this same skepticism to their daily lives to help filter out all the noise and false information that is getting shoved down their throats every minute of every day.

So that the populace in general can become more resistant to the propaganda. AI would effectively be a vaccine to boost our herd immunity to BS.

Like I said. It’s a hope.

The way I look at it is that I haven't heard anything about NFTs in a while. The bubble will burst soon enough when investors realize that it's not possible to get much better without a significant jump forward in computing technology.

We're running out of atomic room to make things smaller just a little more slowly than we're running out of ways to even make smaller things, and for a computer to think like a person, as well as a person and as quickly or faster, we need processing power to keep increasing exponentially per unit of space. Silicon won't get us there.

Microblog Memes

A place to share screenshots of Microblog posts, whether from Mastodon, tumblr, ~~Twitter~~ X, KBin, Threads or elsewhere.

Created as an evolution of White People Twitter and other tweet-capture subreddits.

Rules:

- Please put at least one word relevant to the post in the post title.

- Be nice.

- No advertising, brand promotion or guerrilla marketing.

- Posters are encouraged to link to the toot or tweet etc in the description of posts.
