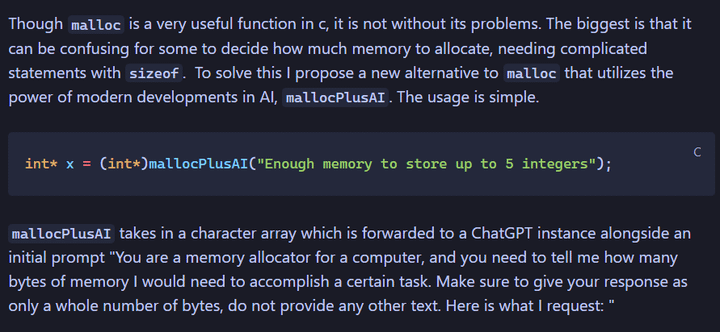

From my experience with ChatGPT:

- It will NEVER consistently give you only the value in the response. Sooner or later it adds some introductory text, as if it’s talking to a human. No matter how I worded the prompt to get just the answer alone, it eventually slipped.

- ChatGPT is terrible with numbers. It can’t reliably count or do arithmetic, so asking it to do byte math is asking for a world of hurt.

If this isn’t joke code, that is scary.
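
If you really do have to consume a number from a chat model, here is a minimal sketch of how I'd guard against both problems above: treat the reply as untrusted text, and do the arithmetic in your own code. The names `extract_number` and `kib_to_bytes` are made up for illustration and are not part of any API; the sketch also assumes the reply should contain exactly one integer.

```python
import re


def extract_number(reply: str) -> int:
    """Pull a single integer out of a possibly chatty reply.

    Hypothetical helper: it fails loudly instead of guessing when the
    reply contains no integer or more than one.
    """
    matches = re.findall(r"-?\d+", reply)
    if len(matches) != 1:
        raise ValueError(
            f"expected exactly one integer, found {len(matches)}: {reply!r}"
        )
    return int(matches[0])


def kib_to_bytes(kib: int) -> int:
    """Do the byte math locally rather than asking the model to do it."""
    return kib * 1024


if __name__ == "__main__":
    # Simulated reply with the kind of intro text described above.
    reply = "Sure! The value you asked for is 4."
    print(kib_to_bytes(extract_number(reply)))
```

The point of raising on anything ambiguous is that a wrong number silently flowing into byte arithmetic is much worse than a visible failure.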