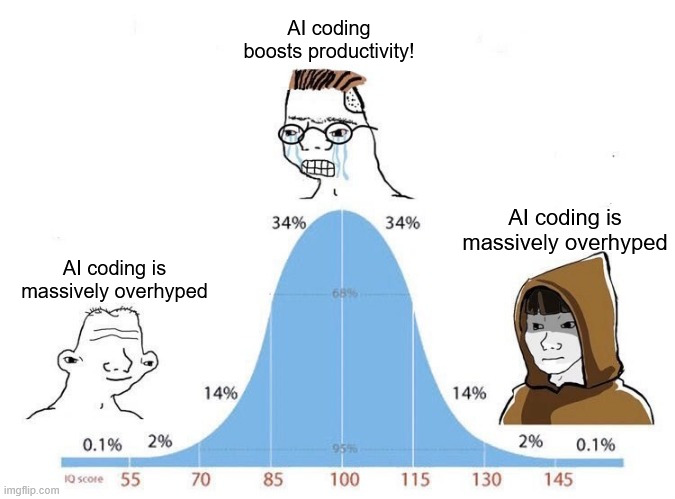

Even though this shit was apparent from day fucking 1, at least the Tech Billionaires were able to cause mass layoffs, destroy an entire generation of new programmers' careers, and introduce endless tech debt and security vulnerabilities, all while grifting investors and businesses and making billions off all of it.

Sad excuses for sacks of shit, all of them.