Ah yes, PhD intelligence, but the wisdom of a toddler.

Mmm, unironically sounds like me. According to my IQ test I had PhD-level intelligence at 18, and what am I doing at 24? Unemployed, playing video games, and crying.

It's alright, you can keep going for a bit. I'm about to hit 30, playing video games and crying.

but the wisdom of a toddler.

Sounds like an improvement to me lol

Book Smart Street Smart

PhD level intelligence? Sounds about right.

Extremely narrow field of expertise ✔️

Misplaced confidence in its abilities outside its area of expertise ✔️

A mind filled with millions of things that have been read, and almost nothing learned from interacting with real people ✔️

An obsession with how many words get published rather than with the quality and correctness of those words ✔️

A lack of social skills ✔️

A complete lack of familiarity with how things work in the real world ✔️

"Never have I been so offended by something I 100% agree with!"

Translation: GPT-5 will (most likely illegally) be fed academic papers that are currently behind a paywall

I guess then we could tell it to recite a paper for free, and it might do it.

Or hallucinate it. Did you know that large amounts of arsenic can cure cancer and the flu?

I mean, GPT 3.5 consistently quotes my dissertation and conference papers back to me when I ask it anything related to my (extremely niche, but still) research interests. It’s definitely had access to plenty of publications for a while without managing to make any sense of them.

Alternatively, and probably more likely, my papers are incoherent and it’s not GPT’s fault. If 8.0 gets a tenure-track position, maybe it will learn to ignore the desperate ramblings of PhD students. Once 9.0 gets tenured, though, I assume it will only reference itself.

NFTs will keep their value forever...

Will GPT-7 then be a burnt-out startup founder?

Ah. The synchronicity.

Wow... They want to give AI even more mental illness and crippling imposter syndrome to make it an expert in one niche field?

Sounds like primary school drop-out level thinking to me.

I'm planning to defend in October and I can say that getting a Ph.D. is potentially the least intelligent thing I've ever done.

It would have to actually have intelligence, period, for it to have PhD-level intelligence. These things are not intelligent. They just throw everything at the wall and see what sticks.

You are correct, but there's a larger problem with intelligence: we don't have a practical definition, and we keep shifting the goalposts. Then there's always the question of the philosophical zombie. If someone acts like a human and has a human body, you won't be able to tell whether they really have intelligence, so we'd only need to put an LLM into a humanlike body (it's not really that simple, but you get the point).

Reminds me of this, although the comic is about a different matter.

All aboard the hype train! We need to stop using the term "AI" for advanced autocomplete. There is not even a shred of intelligence in this. I know many of the people here already know this, but how do we get this message to journalists?! The amount of hype being repeated by respectable journalists is sickening.

People have been calling literal pathfinding algorithms in video games "AI" for decades. This is what AI means now, and I think it's going to be significantly easier to just accept that and clarify when we're talking about actual intelligence than to fight the already-established language.

Too late, the journalists have been replaced by advanced autocompletes already.

What is intelligence?

My organic neural network (brain) > yours (smooth brain)

I jest

GPT-7 will have full-self-driving.

But that's next year.

You know the Chineese? They talk about this ChatPT 7. But we Americans. My uncle, very smart man. Smartest in every room except on Thanks Giving. I always had Thanks Giving and my Turkey, everyone loved my Turkey. He said we will soon have Chat 8 and the Chineese they know nothing like it.

What a bunch of bullshit. I recently asked ChatGPT to do a morphological analysis of some very simple sentences in a Native American language, and it gave absolute nonsense as an answer.

And let's be clear: it was an elementary linguistics task. Something I learned to do on my own just by taking a free online course.

So copying everyone else’s work and rehashing it as your own is what makes a PhD level intelligence? (Sarcastic comments about post-grad work forthcoming, I’m sure)

Unless AI is able to come up with original, testable, verifiable, repeatable, previously unknown associations, facts, theories, etc. of sufficient complexity, it’s not PhD level. Using big words doesn’t count either.

Having a PhD doesn’t say you’re intelligent. It says you’re determined & hardworking.

Eh. Maybe. But don’t discount those PhDs who were pushed through the process because their advisors were just exhausted by them. I have known too many 10th-year students. They weren’t determined or hardworking. They simply couldn’t face up to their shit decisions, bad luck, or intellectual limits.

I like how they have no roadmap for achieving general artificial intelligence (apart from "let's train LLMs with a gazillion parameters and the equivalent of the yearly energy consumption of ten large countries"), yet they pretend ChatGPT 4 is only two steps away from it.

Hard to make a roadmap when people can't even agree on what the destination is, let alone how to get there.

But if you have enough data on how humans react to stimuli, and you have a good enough model, then you will be able to train it to behave exactly like a human. The approach is sound, even though in practice there prooobably isn't enough usable training data in the world to reach true AGI. Still, the models are already good enough to be used for certain tasks.

the only thing this chatbot will be able to simulate is unreasonable persistence

Is it weird that I still want to go for my PhD despite all the feedback about the process? I don’t think I’ve ever met a PhD or candidate that’s enthusiastically said “do it!”

No, not weird at all. PhDs are pain, but certain people like the pain. If you're good at handling stress, and also OK with working in a fast-paced, high-impact environment (for real, not business-talk BS), then it may be the right decision for you. The biggest thing I would say is that you should really, really think about whether this is what you want, since once you start a PhD, you've locked the next 6 years of your life into it with no chance of getting out.

Edit: Also, you need to have a highly sensitive red-flag radar. As a graduate student, you are highly susceptible to abuse from your professor. There is no recourse for abuse. The only way to avoid abuse is by not picking an abusive professor from the get-go. Which is hard, since professors obviously would never talk badly about themselves. Train that red-flag radar, since you'll need to really read between every word and line to figure out if a professor is right for you

It’s a lot of fucking work. If you enjoy hard work, learning about the latest advancements in your field, and can handle disappointment / criticism well, then it’s something to look into.

That, and if you can find a lab/group with recent publications and funding. Not sticking too hard to failed ideas also helps.

I generally tell people the only reason to do it is if your career pursuits require it, and even then I warn them away unless they're really sure. Not every research advisor is abusive, but many are. Some without even realizing it. I ended up feeling like nothing more than a tool to pump up my research advisor's publication count.

It was so disillusioning that I completely abandoned my career goal of teaching at a university because I didn't want to go anywhere near that toxic culture again. Nevertheless, I did learn some useful skills that helped me pivot to another career earning pretty good money.

So I guess I'm saying it's a really mixed bag. If you're sure it's what you want, go for it. But changing your mind is always an option.

No, it's not. But you should know what you're getting into.

In the beginning of my PhD I really loved what I was doing. From an intellectual point of view, I still do. But later, i.e. after 3 years doing a shitty postdoc, I realized that I was not cut out for academia but nevertheless loved doing science.

However, I was lucky to find a place in industry doing what I like.

So I guess my 2c is: think about what comes after the PhD and work towards that goal. A PhD is usually not a goal in itself. HTH

It's like being drafted into a war where you only receive vague orders, and you slowly realize what the phrase "war is a racket" means. You suffer and learn things that you didn't plan on learning.

The fact that I have a PhD, even though I realized soon after I began that I wouldn't use it, thus losing years of my life, is proof that I'm dumb as a rock. Fitting for ChatGPT.

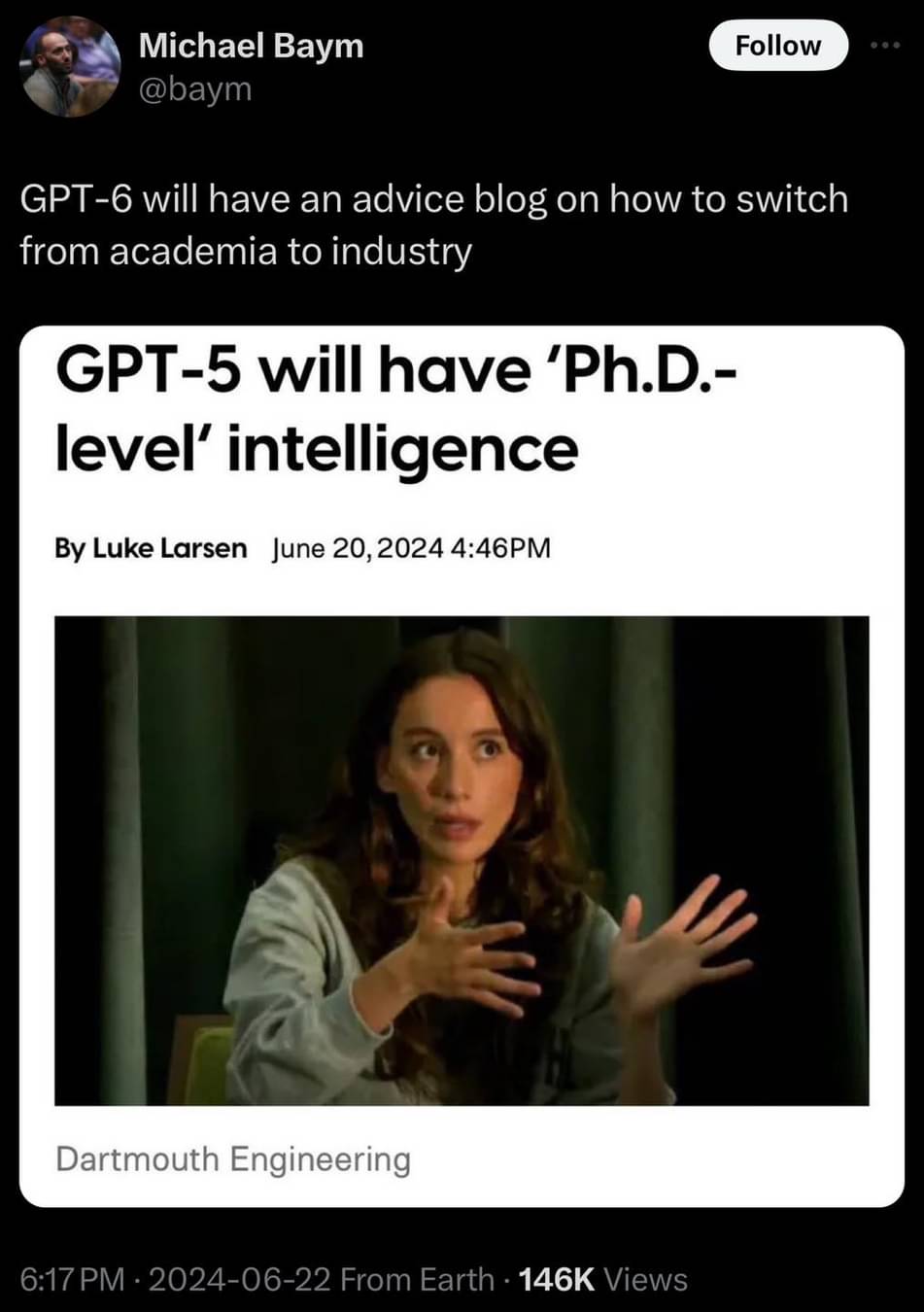

Oh... that's the same person (in the image at least) who said "Yeah AI is going to take those creative jobs, but those jobs maybe shouldn't have existed in the first place".

Which GPT will take my job? I would imagine it's only a year out, at the most.

Then what? I leave my tech job and go find menial labor?

Fuck our government for not laying down rules on this. I knew it would happen, but goddamn...

sigh

I'm so tired of repeating this ad nauseam. No, it's not going to take your job. It's hype-train bullshit full of grifters. There is no intelligence or understanding, nor have we come anywhere close to achieving that. That is still entirely within the realm of science fiction.

ChatGPT is already taking people’s jobs. You overestimate the complexity of what some people get paid for.

GenerativeAI cannot do anything on its own. However, it is a productivity amplifier in the right hands. What those “more productive” people do is reduce the demand for other labour.

Chatbots are performing marketing communication, marketing automation, cloud engineering, simple coding, recruitment screening, tech support, security monitoring, editorial content and news, compliance verification, lead development, accounting, investor relations, visual design, tax preparation, curriculum development, management consulting, legal research, and more. Should they be? Many (I am guessing you) would argue no. Are they, though? Absolutely.

All of the above is happening now. This train is going to accelerate before it hits equilibrium. The value of human contribution is shifting but not coming back to where it was.

Jobs will be created. Jobs are absolutely being lost.

You are correct that ChatGPT is not intelligent. You are right that it does not “understand” anything. What does that have to do with taking people’s jobs? There are many, many jobs where intelligence and understanding are under-utilized or even discouraged. Boiler-plate content creation is more common than you think.

People have the wrong idea about how advanced AI has to be to take people’s jobs.

The loom was not intelligent. It did not “understand” weaving. It still eliminated so many jobs that human society was altered forever and so significantly that we are still experiencing the effects.

As an analogy (not saying this is how the world will choose to go), you do not need a self-driving car that is superior to humans in all cases in order for Uber to eliminate drivers. If the AI can handle 95% of cases, you need 5 drivers for 100 cars. They can monitor, supervise, guide, and fully take over when required.

Many fields will be like this. I do not need an AI with human level intelligence to get rid of the Marcom dept. I need one really skilled person to drive 6 people’s worth of output using AI. How many content creators and headline writers do I need to staff an online “news” room? The lack of person number two may surprise you.

Getting rid of jobs is not just a one for one replacement of every individual with a machine. It is a systemic reduction in demand. It is a shifting of geographic dependence.

Many of the tasks we all do are less novel and high-quality than we think they are. Many of us can be “largely” replaced and that is all it takes. We may not lose our jobs but there will certainly be many fewer new jobs in certain areas than there would have been.

Science Memes

Welcome to c/science_memes @ Mander.xyz!

A place for majestic STEMLORD peacocking, as well as memes about the realities of working in a lab.

Rules

- Don't throw mud. Behave like an intellectual and remember the human.

- Keep it rooted (on topic).

- No spam.

- Infographics welcome, get schooled.

This is a science community. We use the Dawkins definition of meme.
