caption: """AI is itself significantly accelerating AI progress"""

wow I wonder how you came to that conclusion when the answers are written like a Fallout 4 dialogue tree

- "YES!!!"

- "Yes!!"

- "Yes."

- " (yes)"

One of my favorite meme templates for all the text and images you can shove into it, but trying to explain why you have one saved on your desktop just makes you look like the Time Cube guy

I love the word cloud on the side. What is 6G doing there?

I know this shouldn't be surprising, but I still cannot believe people really bounce questions off LLMs like they're talking to a real person. https://ai.stackexchange.com/questions/47183/are-llms-unlikely-to-be-useful-to-generate-any-scientific-discovery

I have just read this paper: Ziwei Xu, Sanjay Jain, Mohan Kankanhalli, "Hallucination is Inevitable: An Innate Limitation of Large Language Models", submitted on 22 Jan 2024.

It says there is a ground truth ideal function that gives every possible true output/fact to any given input/question, and no matter how you train your model, there is always space for misapproximations coming from missing data to formulate, and the more complex the data, the larger the space for the model to hallucinate.

Then he immediately follows up with:

Then I started to discuss with o1. [ . . . ] It says yes.

Then I asked o1 [ . . . ], to which o1 says yes [ . . . ]. Then it says [ . . . ].

Then I asked o1 [ . . . ], to which it says yes too.

I'm not a teacher but I feel like my brain would explode if a student asked me to answer a question they arrived at after an LLM misled them on like 10 of their previous questions.

Cambridge Analytica even came back from the dead, so that's still around.

(At least, I think? I'm not really sure what the surviving companies are like or what they were doing without Facebook's API)

Former staff from scandal-hit Cambridge Analytica (CA) have set up another data analysis company.

[Auspex International] was set up by Ahmed Al-Khatib, a former director of Emerdata.

Chiming in with my own find!

https://archiveofourown.org/works/38590803/chapters/96467457

I've seen this person around a lot with crazy takes on AI. They have a couple quotes that might inflict psychic damage:

If I had the skill to pull it off, a Buddhist cultivation book would've thus been the single most rationalist xianxia in existence.

My acquaintance asks for rational-adjacent books suitable for 8-11 years old children that heavily feature training, self-improvement, etc. The acquaintance specifically asks that said hard work is not merely mentioned, but rather is actively shown in the story. The kid herself mostly wants stories "about magic" and with protagonists of about her age.

They had a long diatribe I don't have a copy of, but they were gloating about having masterful writing despite not reading any books besides non-fiction and HPMoR, their favorite book of all time.

There's also a whole subreddit from hell about this subgenre of fiction: https://www.reddit.com/r/rational/

Oh whoops, I should have archived it.

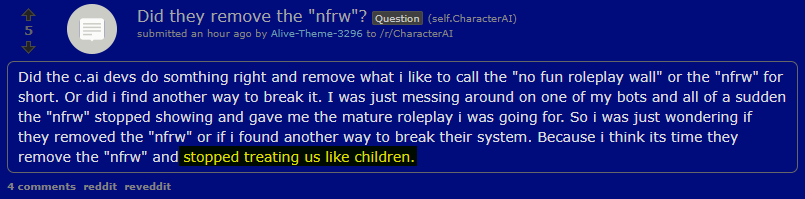

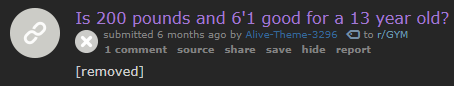

There were about 7 images posted of users roleplaying with bots, all ending with a bot response that cut off halfway with an error message that read "This content may violate our policies; blablabla; please use the report button if you believe this is a false positive and we will investigate." The last one was some kind of parody image making fun of the warning.

Most of them were some kind of romantic roleplay with bad spelling. One was like, "i run my hand down your arm and kiss you", and the bot's response triggered the warning. Another one was like, "*is slapped in the face* it's okay, I still love you" and the rest of the message generated a warning. There wasn't enough context for that one, so the person might have been writing it playfully (?), but that subreddit has a lot of blatant sexual violence regardless.

This community pops up on /r/all every so often and each time it scares me.

Sometimes I see kids' games (and all games really) have ultra-niche, super-online protests that are like "STOP Zooshacorp from DESTROYING K-Smog vs. Batboy Online", and when I look closer it's either even more confusing or it's about something people didn't like in the latest update. This is like that, but with an awful twist where it's about people getting really attached to these AI girlfriend/sex roleplay apps. The spelling and sentences make it seem like it's mostly kids, too.

edit: here's a terrible example!

This is hilarious, thank you for digging this up. I love how they're just co-opting completely wrong words (wow, why does that sound familiar?) like "anti" and "fundamentalist orthodox" to describe the people they don't like

There's another one called "ArtistHate", but I was surprised it's actually a pro-artist subreddit.

If you want another fun read, check out Adobe's 'Stock Contributors' AI artist forum. I found it by accident, and it's full of people struggling so, so hard to understand why their puppy photos with missing limbs or physically impossible landscapes aren't accepted. Any time someone "asks for clarification" on the submission rules I swear you can tell what the issue is at a glance but they're stumped over it.

Like, what is this person even trying to do??? Why do people feel the need to regurgitate responses from ChatGPT for no reason? Why are they even submitting AI art to Adobe Stock at all? Are they even getting paid? These are the same people who like those photos of snowboarding babies and have to reply "thank you" to every post on their Facebook feed because they think it was personally sent to them.

People are so, so, so bad at telling what's a bot and what's real. I know social media is swarming with bots, but if you're interacting with somebody who's saying anything more complicated than "P o o s i e I n B i o" it's probably not a bot. A similar thing happens in online games, too, and it's usually the excuse people use before harassing someone else.

But damn the lengths people will go to to avoid admitting they were wrong. This comment chain just keeps going on with somebody who's convinced {origin="RU"}{faith="bad"}{election_manipulation="very yes"} must be real because something something microservices: https://www.reddit.com/r/interestingasfuck/comments/1dlg8ni/russian_bot_falls_prey_to_a_prompt_iniection/l9pbmrw/ It reads like something straight off /r/programming or the orange site

Then it comes full circle with people making joke responses on Twitter imitating the first post, and then other people taking those joke responses as proof that the first one must be real: https://old.reddit.com/r/ChatGPT/comments/1dimlyl/twitter_is_already_a_gpt_hellscape/l9691c8/

This account kind of kicked up some drama too, basically for the same reason (answering an LLM prompt), but it's about mushroom ID instead: https://www.reddit.com/user/SeriousPerson9 I've seen people like this who use voice-to-text and run their train of thought through ChatGPT or something, like one person notorious on /r/gamedev. But people always assume it's some advanced autonomous bot with stochastic post delays that mimic a human's active hours when like, it's usually just somebody copy/pasting prompts and responses.

Sorry if you contract any diseases from those links or comment chains

Yesterday before bed I saw some galaxy-brained takes on PKM (personal knowledge management software) from a 7-day-old account, and curiosity got the better of me. I was not disappointed. (Sadly they deleted their account after I woke up: /u/Few-Elephant-2600 if you're bored and have moderator API access.)

Since GPUs continuously generate large amounts of waste heat during AI training, could electric/GPU stoves utilize this unused thermal energy resource through on-demand tickets as distributed networks instead of citizens using a wasteful private electric stove? What are the scientific challenges?

Honey can you preheat the porn generator?

Maybe you could pair it with this accursed AI of Things Smart Oven. Fun quotes:

“Users aren’t aware of any of the oven’s learning processes,”

Ovens that learn from one another

Finally, I can experience Windows progress bars when baking potatoes:

The predictive model updates the remaining baking time every 30 seconds

Jesus, that's nasty