I remember when this guy used to castigate Sam Harris for platforming Charles Murray’s race science. The same guy who now eulogizes Charlie Kirk and does the bidding of billionaires. Really encapsulates the elite pivot to the right.

More of a pet peeve than a primal scream, but I wonder what's with Adam Tooze and his awe of AI. Tooze is a left-wing economic historian who’s generally interesting to listen to (though in tackling such a wide range of subject matter he perhaps sometimes lacks depth), but he nevertheless seems as AI-pilled as any VC. Most recently I came across this bit: Berlin Forum on Global Cooperation 2025 - Keynote Adam Tooze

Anyone who’s used AI seriously knows the LLMs are extraordinary in what they’re able to do ... 5 years down the line, this will be even more transformative.

Really, anyone Adam? Are you sure about the techbro pitch there?

well, Zen buddhism

Yeah, this is the Valley after all. Some have used Buddhism as a building block for constructing “metarationality”.

The irony being that rather than writing good SCP themselves, they'd make for even better subjects of containment & study.

Wow, the term 'epistemically humble' is ingenious really. I don't have to listen to any critics at all, not because I'm a narcissist, oh no, but because I'm so 'epistemically humble' no one could possibly have anything left to teach me!

How much money would be saved by just funneling the students of these endless ‘AI x’ programs back to the humanities where they can learn to write (actually good) science fiction to their heart’s content? Hey, finally a way AI could actually lead to some savings!

Bill Gates is having a normal one.

https://www.cnbc.com/2025/03/26/bill-gates-on-ai-humans-wont-be-needed-for-most-things.html

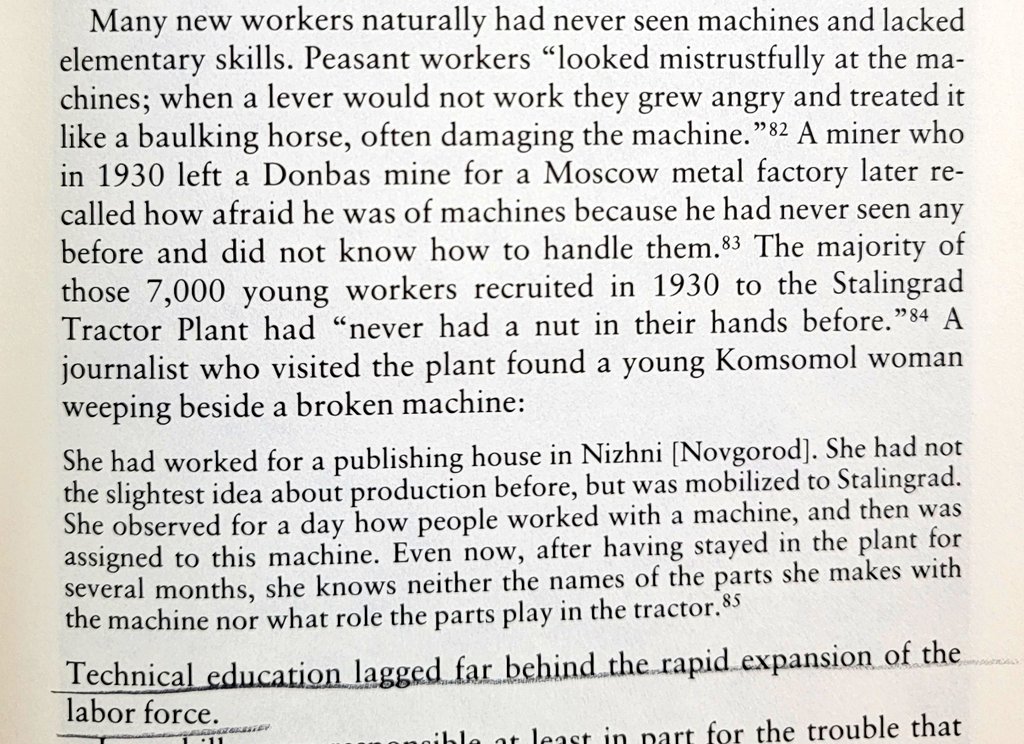

Reminds me of the stories of how Soviet peasants during the rapid industrialization drive under Stalin, who’d never before seen any machinery in their lives, would get emotional with faulty machines and try to coax them like they were their farm animals. But these were Soviet peasants! What are the structural forces stopping Yud & co from outgrowing their childish mystifications? Deeply misplaced religious needs?

A generous interpretation would be that writing music in the context of the modern music industry may indeed be creatively unsatisfying for composers, but the solutions to that have nothing to do with magical tech-fixes and everything to do with politics, which is of course anathema to these types. What dumb times we live in.

Amazing quote he included from Tyler Cowen:

If you are ever tempted to cancel somebody, ask yourself “do I cancel those who favor tougher price controls on pharma? After all, they may be inducing millions of premature deaths.” If you don’t cancel those people — and you shouldn’t — that should broaden your circle of tolerance more generally.

Yes leftists, you not cancelling someone campaigning for lower drug prices is actually the same as endorsing mass murder and hence you should think twice before cancelling sex predators. It’s in fact called ephebophilia.

What the globe emoji followed with is also a classic example of rationalists getting mesmerized by their verbiage:

What I like about this framing is how it aims to recalibrate our sense of repugnance in light of “scope insensitivity,” a deeply rooted cognitive bias that occurs “when the valuation of a problem is not valued with a multiplicative relationship to its size.”

Where did you get that impression from? He says himself he is not advocating against aid per se, but that its effects should be judged more holistically, e.g. that organizations like GiveWell should also include the potential harms alongside benefits in their reports. The overarching message seems to be one of intellectual humility – to not lose sight that the ultimate aim is to help another human being who in the end is a person with agency just like you, not to feel good about yourself or to alleviate your own feelings of guilt.

The basic conceit of projects like EA is the incredible high of self-importance and moral superiority one can get blinded by when one views themselves as more important than other people by virtue of helping so many of them. No one likes to be condescended to; sure, a life saved with whatever technical fix is better than a life lost, but human life is about so much more than bare material existence – dignity and freedom are crucial to a good life. The ultimate aim should be to shift agency and power into the hands of the powerless, not to bask in being the white knight trotting around the globe, saving the benighted from themselves.

Rutger Bregman admits that he’s not sure what AGI actually is beyond vague utopian visions, but trivial questions aside, he’s sure it will revolutionize the world in 10 years.

For those who haven’t heard of him, he’s a Dutch historian who achieved some fame for his book arguing for UBI and reduced work weeks, as well as for his critique of rich people avoiding taxes and a segment on Tucker Carlson’s show where he openly challenged Carlson’s politics. He has since seemingly turned 180 degrees and become a billionaire-backed effective altruist.