they're going to try to write COBOL with AI. let's see how that works out

Surprised this hasn't been mentioned yet: https://www.rollingstone.com/culture/culture-news/meta-ai-users-facebook-instagram-1235221430/

Facebook and Instagram to add AI users. I'm sure that's what everyone has been begging for...

AIfu

That's gold. I like it.

Damn, HP doesn't mess around. I'm going to stop trashing them around the office.

I will never get tired of that saltman pic.

Looking forward to the LLM vs LLM PRs with hundreds of back and forth commit-request changes-commit cycles. Most of it just flipping a field between final and not final.

Wait, is this how Those People claim that Copilot actually “improved their productivity”? They just don’t fucking read what the machine output?

Yes, that's exactly what it is. That and boilerplate, but it probably makes all kinds of errors that they don't notice, because the build didn't fail.

Skimmed the paper, but I don't see the part where the game engine was being played. They trained an "agent" to play Doom using ViZDoom, and trained the diffusion model on the agent's "trajectories". But I didn't see anything about giving the agents the output of the diffusion model for their gameplay, or the diffusion model reacting to input.

It seems like it was able to generate the Doom video based on a given trajectory, and assume that trajectory could be real-time human input? That's the best I can come up with. And the experiment was just some people watching video clips, which doesn't track with the claims at all.

It turns out AI really is going to try to wipe out the humans. Just, you know, indirectly through human activity trying to make it happen.

The only bright spot is that the new educational AI model doesn’t exist yet and there’s plenty of time for the whole project to go sideways before launch.

Do you really think that will stop them?

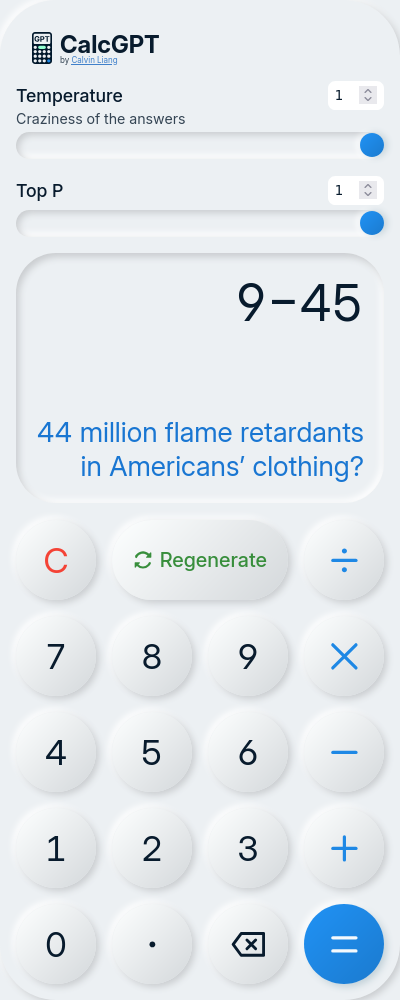

it cannot handle subtraction going negative

Ran across https://406.fail/ and had a chuckle.