* the background was taken from the Sky: Children of the Light Wiki

alt image link from blahaj zone

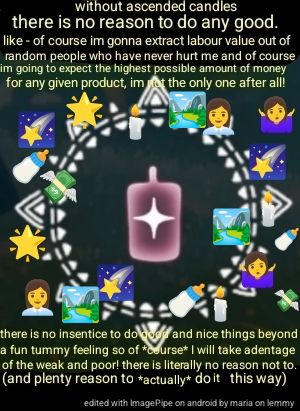

so anyway - I havent posted in quite a while, so here I go yappin bout that one game i keep playin. there really is no reason to do good besides the good feels, and som peeps seem to not have that very much -..... so here we r.

imagine how fun and cool the world would be if there were a reason to play along nicely - like -... gosh, now that sounds pretty nice, hm? a world where slip-ups r oki - but bein systematically evil.,.. well - its actually bad for that peep.

but no! now instead, im considering stealing from supermarkets for my own benefit because there really is nothing holding me back from doing that. like - I dun wana! but if that somehow hurts the larger chain, im all here for it-.... i guess it doesnt hurt the larger chain so i should leave it,... but in larger - clearly profitable markets it feels fine-

feels so wrong.... or rather -.... doesnt feel so wrong anymore when u notice that all the big peeps r also bein evil. if that means that all the cool and nice peeps stay at the bottom while the cheaters and not-cool evil peeps get to rise, well like - that feels like the opposite of karma (or whatever that is called-)! theres clearly an inverted reward system happening here!

piracy and the like doesnt feel evil when the prices for watching the art legally are rising so sharply, not to offer a better service, but to just.... make more möni.

CW: really egregiously rage-bait kinda stuff

this might sound really unlike a Lemmy user right here, but im actually not that opposed to a capitalist-like system, where exploitation and monopolies r somhow mitigated.... to be very clear: im from som country employing social democracy where most of the basic living things r cared for to some degree kinda for free, so i have more hope in that than a fellow 'merican 🇺🇸🍔🛢️🏚️🤑🔴🔵🪦🧀👨‍💼📈🔫 might, cuz im actually experiencing som of those benefits.

but like -,... i mean.,. of course id prefer fully automated luxury gay space communism, but for that revolution to take place, there r too many peeps to still convince. maybe its a bit too early for that- maybe a basic "heyo, we kill more CEOs and hope good comes of it" is more applicable ~

oh yea ohgosh I just opened a whole can of worms I probably shouldnt have. plz,.... be kind in the comments....

ohgosh they got some... interestin namin schemas.... woag-