cross-posted from: https://infosec.pub/post/13676291

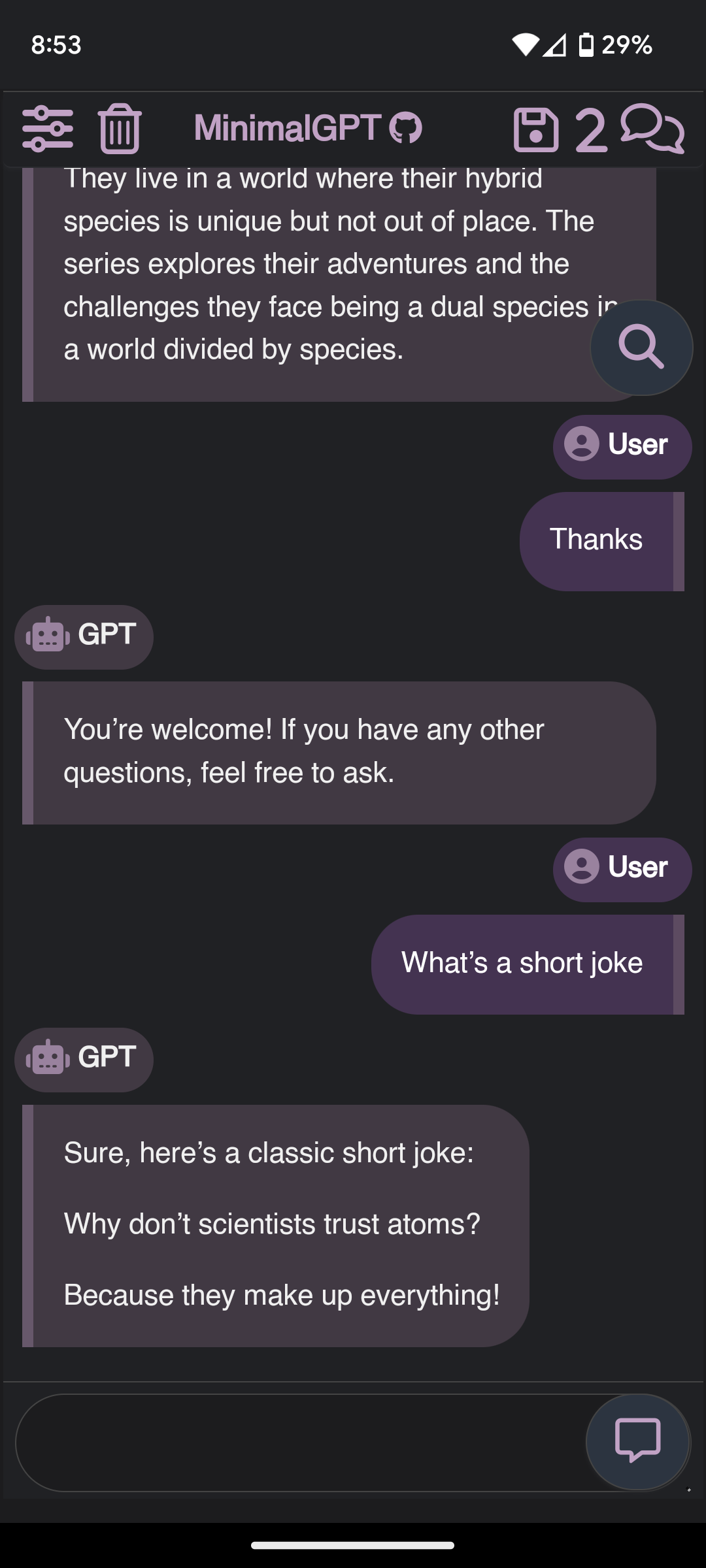

I've been building MinimalChat for a while now, and based on the feedback I've received, it's in a pretty decent place for general use. I figured I'd share it here for anyone who might be interested!

Quick Features Overview:

- Mobile PWA Support: Install the site like a normal app on any device.

- Support for Any OpenAI-Formatted API: Works with LM Studio, OpenRouter, etc.

- Local Storage: All data is stored locally in the browser with minimal setup. In Docker, just enter a port and go.

- Experimental Conversational Mode (GPT Models for now)

- Basic File Upload and Storage Support: Files are stored locally in the browser.

- Vision Support with Maintained Context

- Regen/Edit Previous User Messages

- Swap Models Anytime: Maintain conversational context while switching models.

- Set/Save System Prompts: Set the system prompt for a conversation. Prompts are also saved to a list so you can easily switch between them.
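For anyone wondering what "OpenAI formatted" and "swap models anytime" mean in practice, here is a rough Python sketch. It is not MinimalChat's actual code (the app runs in the browser), just an illustration of the request shape such APIs share. The helper name `build_chat_request`, the model names, and the API key are hypothetical placeholders, and the base URLs are assumptions that depend on your own setup. The point is that the message history is separate from the model name, so switching models only changes one field.

```python
import json
import urllib.request


def build_chat_request(base_url, model, messages, api_key=None):
    """Build (but do not send) a POST request in the OpenAI chat-completions shape."""
    url = base_url.rstrip("/") + "/chat/completions"
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Hosted providers typically expect a bearer token; local servers often do not.
        headers["Authorization"] = f"Bearer {api_key}"
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


# The conversation lives in one list, independent of any model.
history = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Hello!"},
]

# Same history, two different backends and models (all values are placeholders).
local_req = build_chat_request(
    "http://localhost:1234/v1", "hypothetical-local-model", history
)
hosted_req = build_chat_request(
    "https://openrouter.ai/api/v1",
    "hypothetical/hosted-model",
    history,
    api_key="sk-placeholder",
)

print(local_req.full_url)
print(hosted_req.full_url)
```

Nothing is sent over the network here; passing either request to `urllib.request.urlopen` would do that, assuming a server is actually listening at that address.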

The idea is to make it essentially foolproof to deploy or set up while being generally full-featured and aesthetically pleasing. No additional databases or servers are needed; everything is contained and managed locally inside the web app itself.

It's another chat client in a sea of clients but it is unique in its own ways in my opinion. Enjoy! Feedback is always appreciated!

Self-hosting wiki section: https://github.com/fingerthief/minimal-chat/wiki/Self-Hosting-With-Docker

I thought sharing here might be a good idea as well, since some might find it useful!

Since the initial post I've also added some updates that hugely improve message rendering speed and add a plethora of new models you can load and run fully locally in your browser (Edge and Chrome) with WebGPU and WebLLM.

That seems like a pretty naive and biased approach to software to me honestly.

Ease of use, community support, feature set, CI/CD, etc. should all come into play when deciding what to use.

Freedom at all costs is great until you limit community development and your potential user base by 90% by using a completely open repo service that 5% of the population uses, or some small Discord alternative.

So then the option is to host on multiple platforms/communities, and the management and time investment go up from keeping them all in sync and active.

As with most things in life, it's best to look at things with nuance rather than a hard stance imo.

I may stand it up on another service at some point, but also anyone else is totally free to do that as well. There are no restrictions.