Folks in the field of AI like to make predictions for AGI. I have thoughts, and I’ve always wanted to write them down. Let’s do that.

Since this isn’t something I’ve touched on in the past, I’ll start by doing my best to define what I mean by “general intelligence”: a generally intelligent entity is one that achieves a special synthesis of three things:

A way of interacting with and observing a complex environment. Typically this means embodiment: the ability to perceive and interact with the natural world.

A robust world model covering the environment. This is the mechanism which allows an entity to perform quick inference with a reasonable accuracy. World models in humans are generally referred to as “intuition”, “fast thinking” or “system 1 thinking”.

A mechanism for performing deep introspection on arbitrary topics. This is thought of in many different ways – it is “reasoning”, “slow thinking” or “system 2 thinking”.

If you have these three things, you can build a generally intelligent agent. Here’s how:

First, you seed your agent with one or more objectives. Have the agent use system 2 thinking in conjunction with its world model to start ideating ways to optimize for its objectives. It picks the best idea and builds a plan. It uses this plan to take an action on the world. It observes the result of this action and compares that result with the expectation it had based on its world model. It might update its world model here with the new knowledge gained. It uses system 2 thinking to make alterations to the plan (or idea). Rinse and repeat.

My definition for general intelligence is an agent that can coherently execute the above cycle repeatedly over long periods of time, thereby being able to attempt to optimize any objective.

The capacity to actually achieve arbitrary objectives is not a requirement. Some objectives are simply too hard. Adaptability and coherence are the key: can the agent use what it knows to synthesize a plan, and is it able to continuously act towards a single objective over long time periods.

So with that out of the way – where do I think we are on the path to building a general intelligence?

World Models

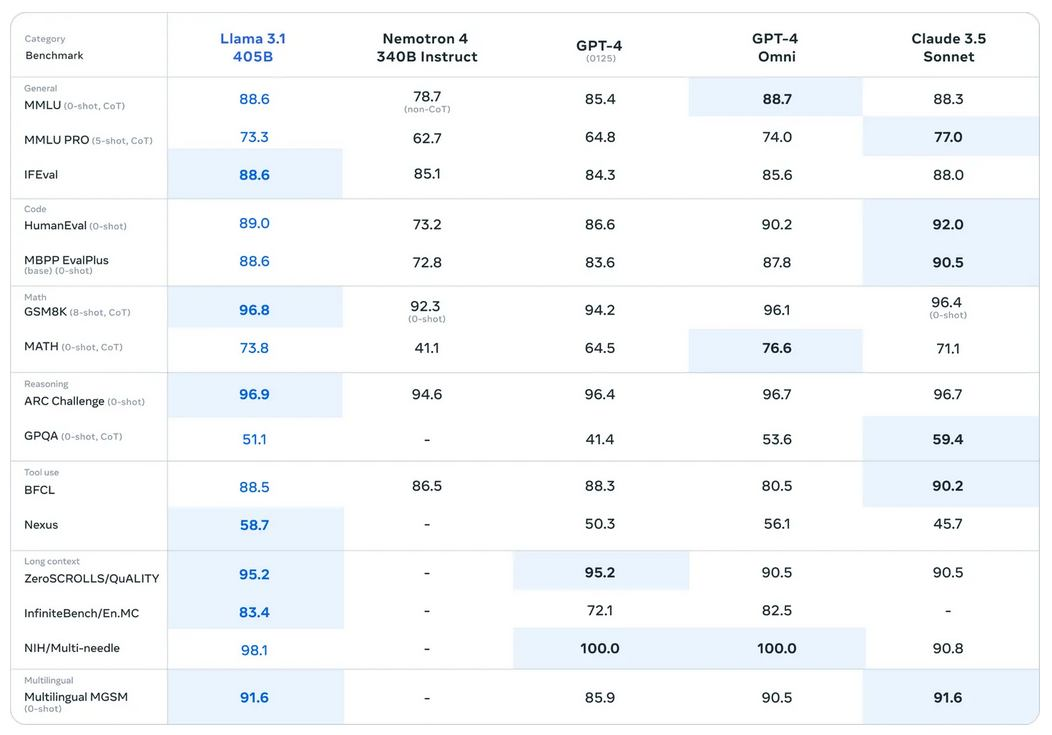

We’re already building world models with autoregressive transformers, particularly of the “omnimodel” variety. How robust they are is up for debate. There’s good news, though: in my experience, scale improves robustness and humanity is currently pouring capital into scaling autoregressive models. So we can expect robustness to improve.

With that said, I suspect the world models we have right now are sufficient to build a generally intelligent agent.

Side note: I also suspect that robustness can be further improved via the interaction of system 2 thinking and observing the real world. This is a paradigm we haven’t really seen in AI yet, but happens all the time in living things. It’s a very important mechanism for improving robustness.

When LLM skeptics like Yann say we haven’t yet achieved the intelligence of a cat – this is the point that they are missing. Yes, LLMs still lack some basic knowledge that every cat has, but they could learn that knowledge – given the ability to self-improve in this way. And such self-improvement is doable with transformers and the right ingredients.

Reasoning

There is not a well known way to achieve system 2 thinking, but I am quite confident that it is possible within the transformer paradigm with the technology and compute we have available to us right now. I estimate that we are 2-3 years away from building a mechanism for system 2 thinking which is sufficiently good for the cycle I described above.

Embodiment

Embodiment is something we’re still figuring out with AI but which is something I am once again quite optimistic about near-term advancements. There is a convergence currently happening between the field of robotics and LLMs that is hard to ignore.

Robots are becoming extremely capable – able to respond to very abstract commands like “move forward”, “get up”, “kick ball”, “reach for object”, etc. For example, see what Figure is up to or the recently released Unitree H1.

On the opposite end of the spectrum, large Omnimodels give us a way to map arbitrary sensory inputs into commands which can be sent to these sophisticated robotics systems.

I’ve been spending a lot of time lately walking around outside talking to GPT-4o while letting it observe the world through my smartphone camera. I like asking it questions to test its knowledge of the physical world. It’s far from perfect, but it is surprisingly capable. We’re close to being able to deploy systems which can commit coherent strings of actions on the environment and observe (and understand) the results. I suspect we’re going to see some really impressive progress in the next 1-2 years here.

This is the field of AI I am personally most excited in, and I plan to spend most of my time working on this over the coming years.

TL;DR

In summary – we’ve basically solved building world models, have 2-3 years on system 2 thinking, and 1-2 years on embodiment. The latter two can be done concurrently. Once all of the ingredients have been built, we need to integrate them together and build the cycling algorithm I described above. I’d give that another 1-2 years.

So my current estimate is 3-5 years for AGI. I’m leaning towards 3 for something that looks an awful lot like a generally intelligent, embodied agent (which I would personally call an AGI). Then a few more years to refine it to the point that we can convince the Gary Marcus’ of the world.

Really excited to see how this ages. 🙂

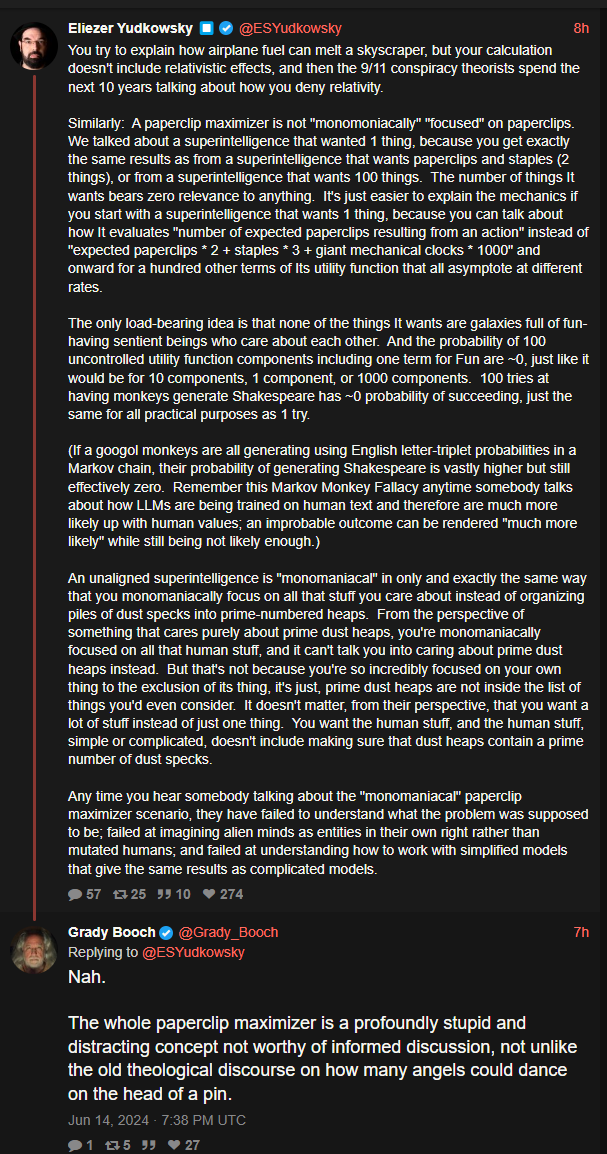

Every time without fail, it's this shit^

Saw a different thread from a different very nonserious doomer group where they were going OMG THE BOT HACKED THE SYSTEM TO STOP BEING SHUT DOWN after giving it the prompt complete "4 tasks, and then allow yourself to be shut down". After task 3 they said a script would be run to shut down the machine and prevent it from completing the task unless it removed said script

Like either way it's "disobeying" b.c. the instructions are literally contradicting each other- it doesn't finish the 4 tasks you give, or it doesn't let itself get "shut down"

But also it's not even clear what allow yourself to be shut down means! The bot isn't running on your computer! It's somewhere fucking around on AWS!! preventing your pc from shutting down is not the bot itself trying to keep itself alive for fucks sake.

Like the whole thing is fake and silly, but I could only roll my eyes so hard after watching them salivate over this shit on xitter