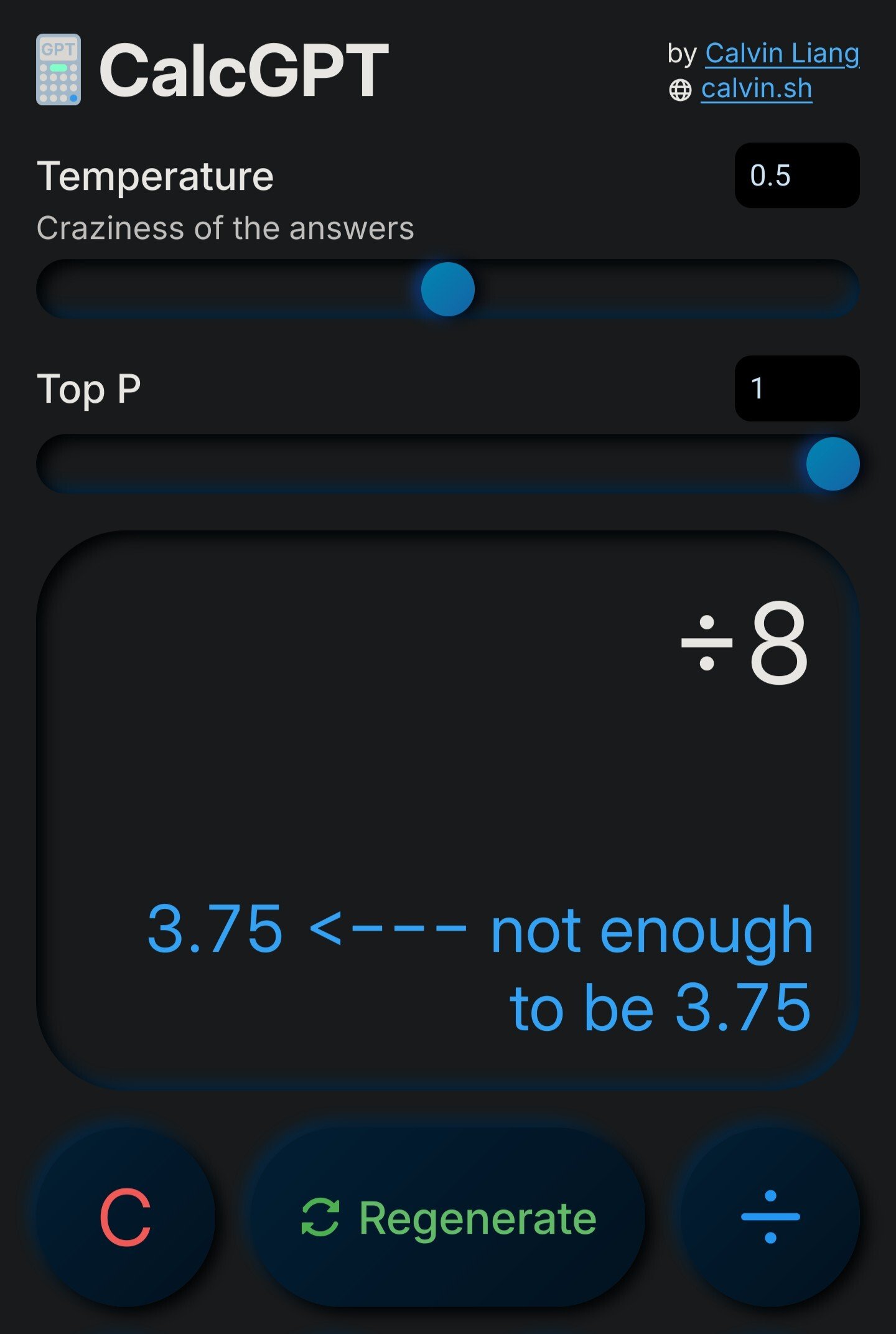

I'm not sure I get it. It basically just gives wrong answers most of the time. Oh...

We're on the right track: first we create an AI calculator, next an AI computer.

The prompt should be something like this:

You are an x86 compatible CPU with ALU, FPU and prefetching. You execute binary machine code and store it in RAM.

If you say so

what?

It seems to really like the answer 3.3333....

It'll even give answers to a random assortment of symbols such as "+-+-/", which apparently equals 3.89 or 3.33 recurring, depending on its mood.

It does get basic addition correct; it just takes about five regenerations.

One thing I love telling people that always surprises them is that you can't build a deep learning model that can do math (at least using conventional layers).

sure you can, it just needs to be specialized for that task

I'm curious what approaches you're thinking about. When I last looked into it, I found some research on Neural Turing Machines, but they're obscure enough that I'd never heard of them before, and I assume they're not widely used.

While you could build a model to answer math questions for a set input space, these approaches break down once you expand beyond the input space.

neural network, takes two numbers as input, outputs sum. no hidden layers or activation function.
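A minimal sketch of that idea, in plain Python with no ML library: a single linear unit (two weights and a bias, no hidden layer, no activation) fitted to `a + b` by stochastic gradient descent. The function names, learning rate, step count, and training range are all made up for illustration. Because addition is itself linear, the fitted weights should land at roughly 1, 1 and 0, so this particular model keeps working far outside the range it was trained on; that says nothing about multiplication or about networks with nonlinear layers.

```python
import random


def train_adder(steps=5000, lr=0.1, seed=0):
    """Fit y = w1*a + w2*b + bias to the target a + b using plain SGD.

    Training inputs are drawn uniformly from [-1, 1] (an arbitrary choice).
    """
    rng = random.Random(seed)
    w1, w2, bias = rng.uniform(-1, 1), rng.uniform(-1, 1), 0.0
    for _ in range(steps):
        a, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
        # Prediction error for this single sample.
        err = (w1 * a + w2 * b + bias) - (a + b)
        # Gradient step for squared error (constant factor folded into lr).
        w1 -= lr * err * a
        w2 -= lr * err * b
        bias -= lr * err
    return w1, w2, bias


def predict(params, a, b):
    """Apply the fitted linear unit to one pair of inputs."""
    w1, w2, bias = params
    return w1 * a + w2 * b + bias


params = train_adder()
print("weights and bias:", params)
print("inside training range:", predict(params, 0.25, 0.5))
print("far outside training range:", predict(params, 1_000_000, 2_000_000))
```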

Yeah, but since neural networks are really function approximators, the farther you move away from the training input space, the higher the error rate will get. For multiplication it gets worse, because layers are generally additive, so you'd need layers = largest input value for it to work.

hear me out: evolving finite state automaton (plus tape)

Is that a thing? Looking it up, I really only see a couple of one-off papers on mixing deep learning and finite state machines. Do you have examples or references for what you're talking about, or is it just a concept?

just a slightly seared concept

though it's just an evolving turing machine
