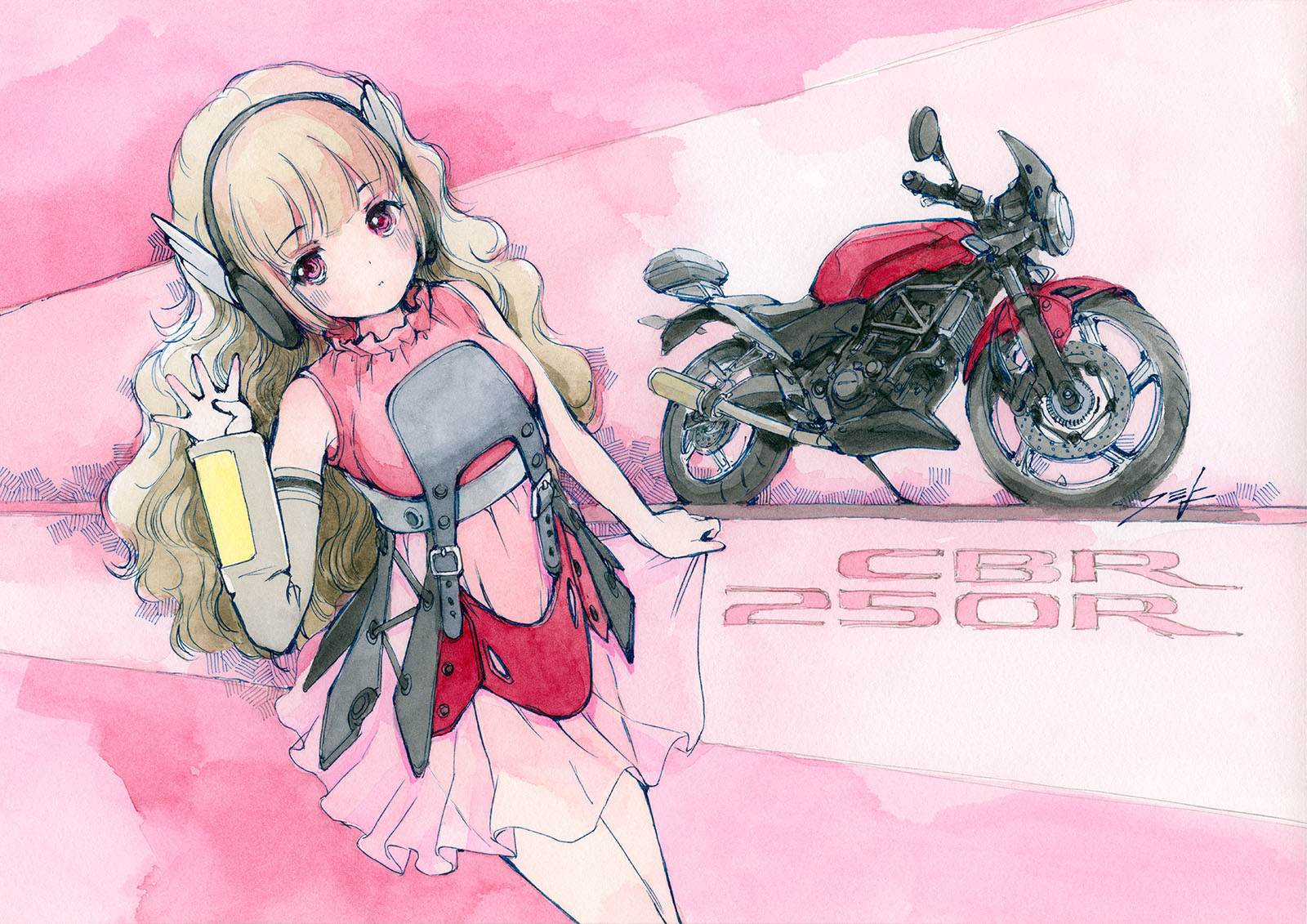

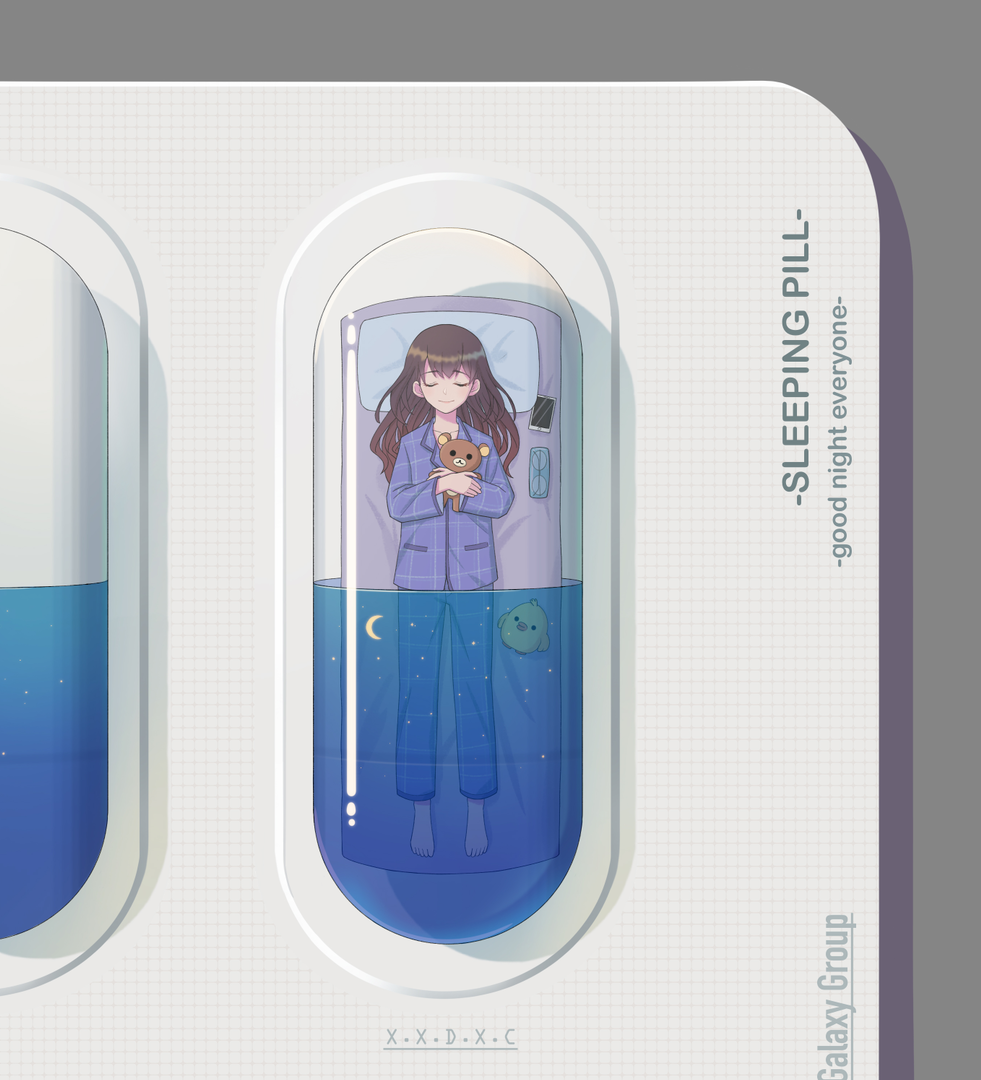

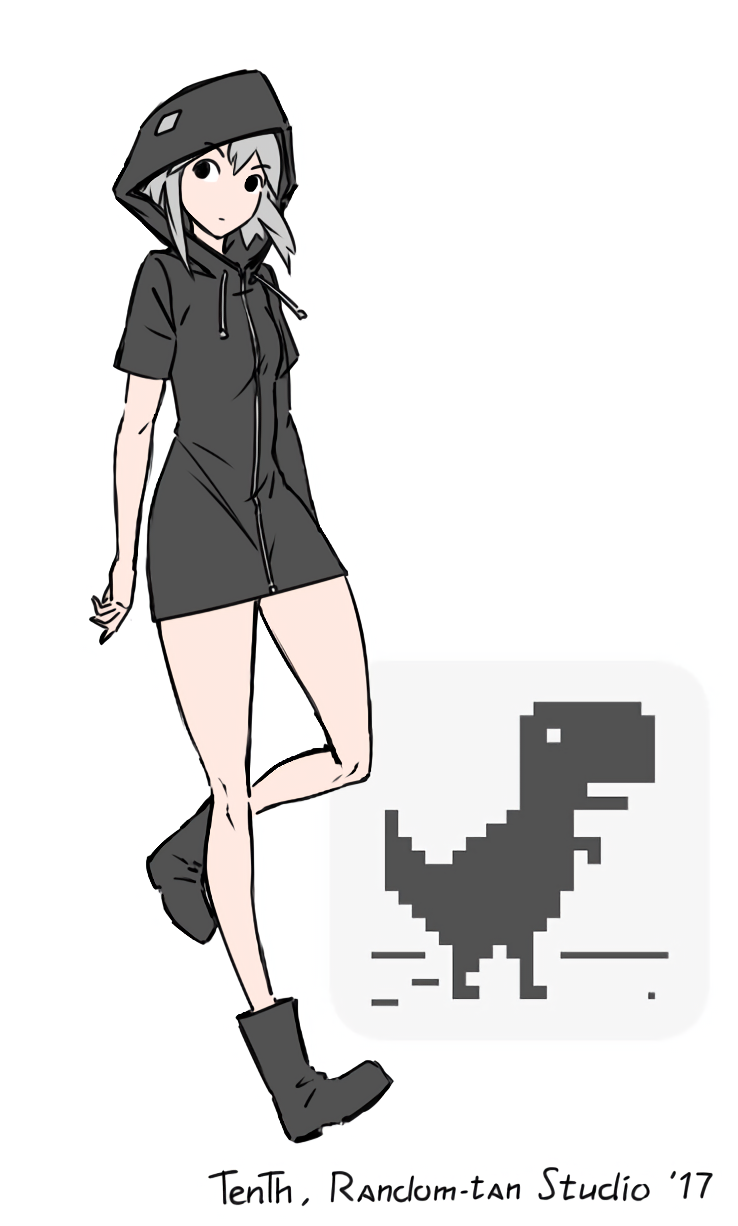

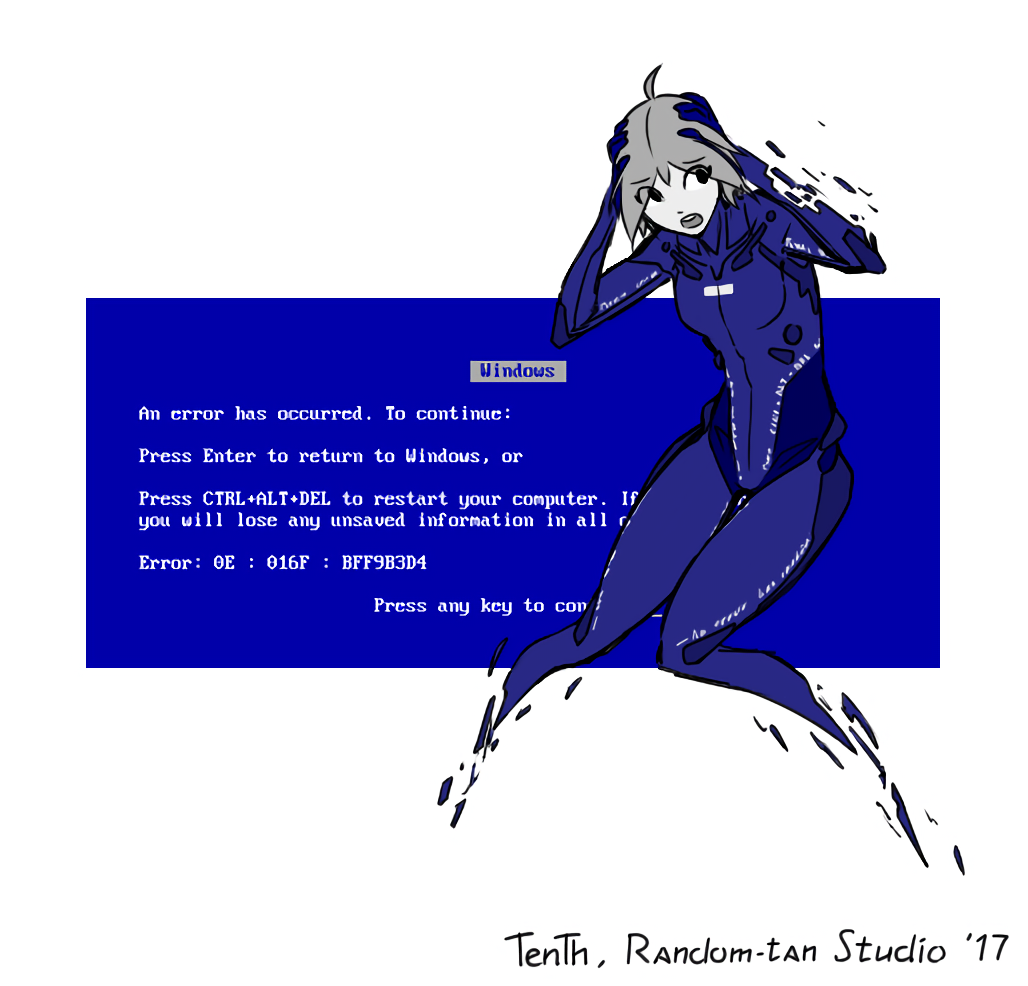

MorphMoe

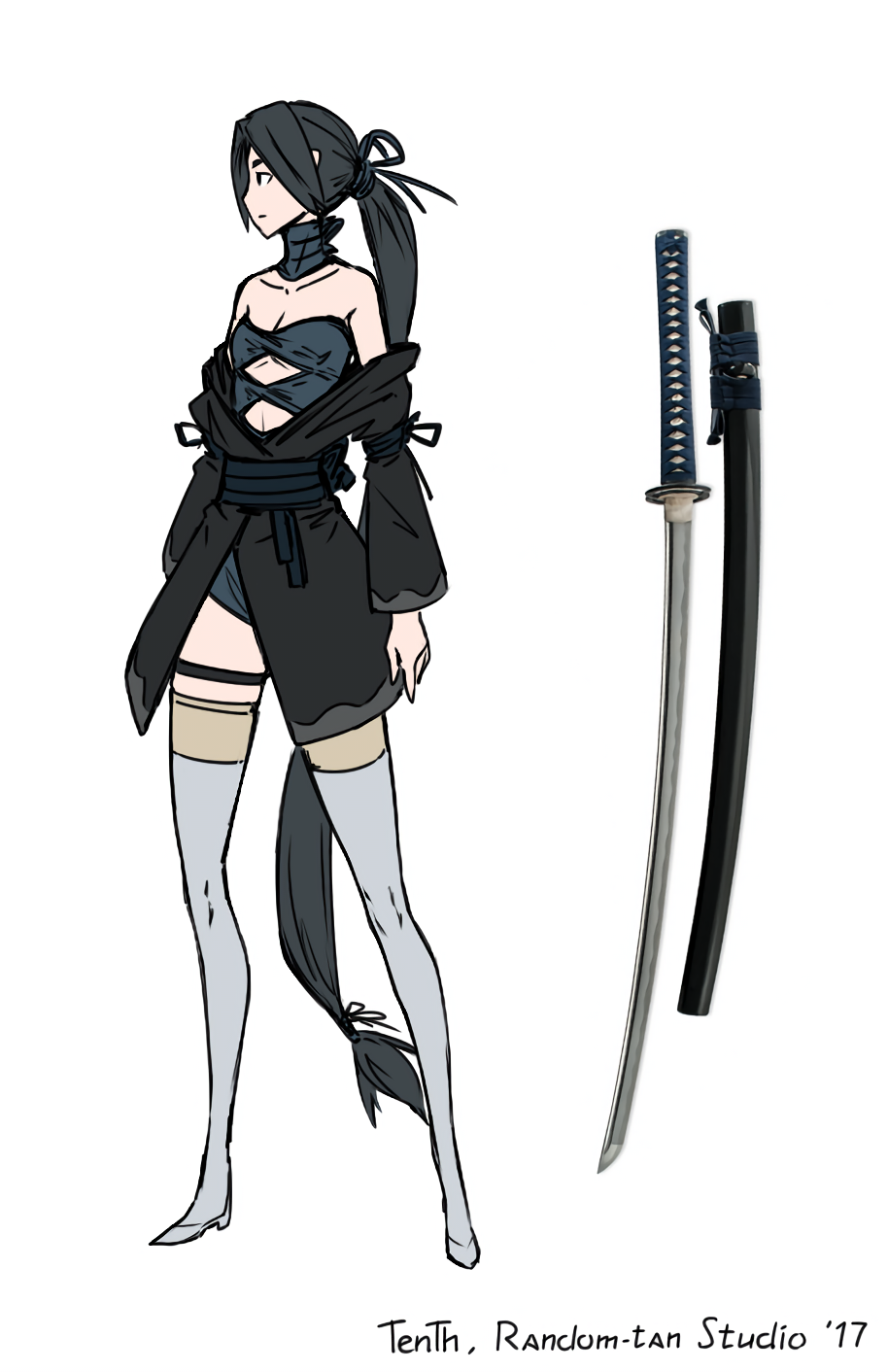

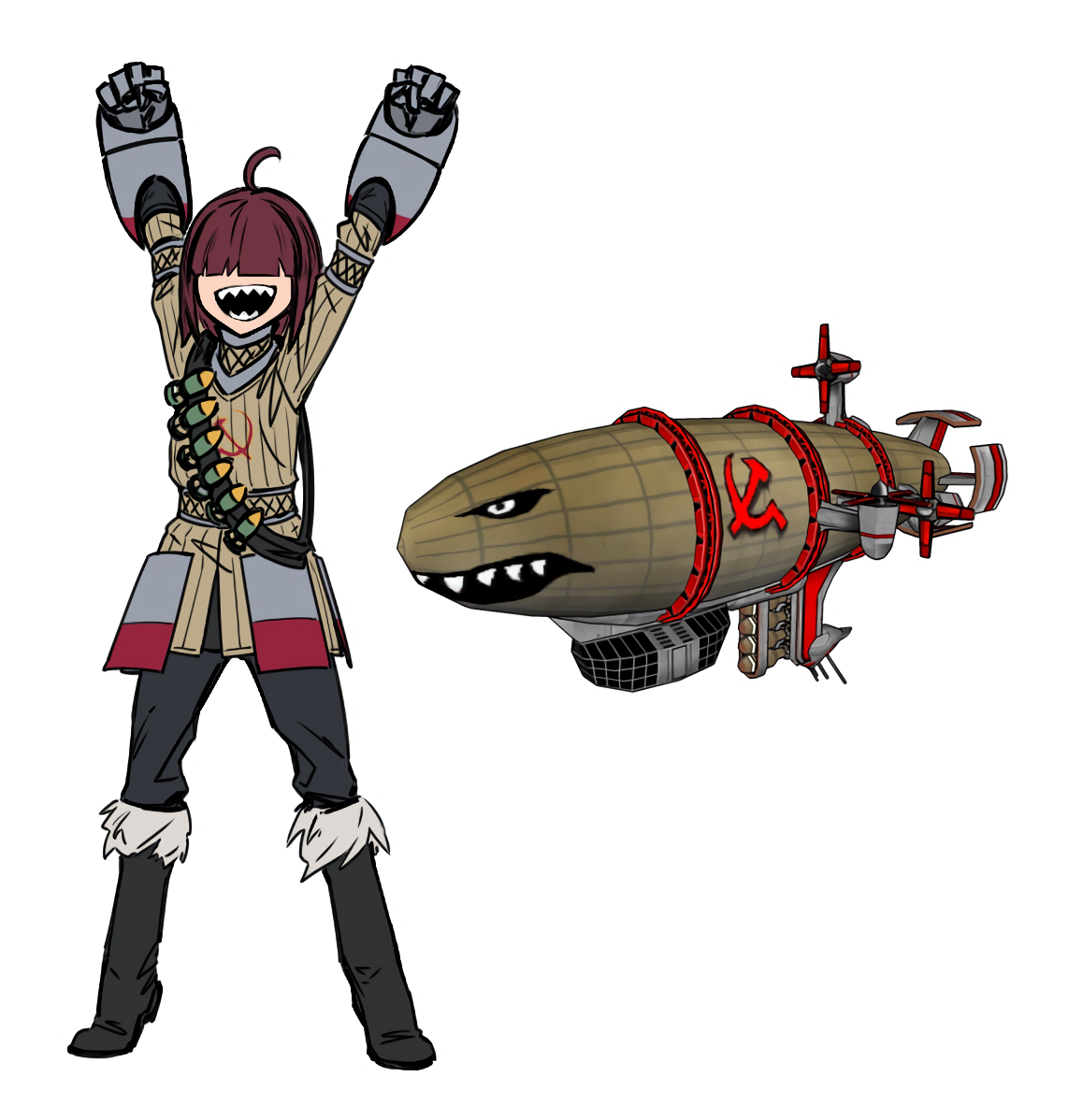

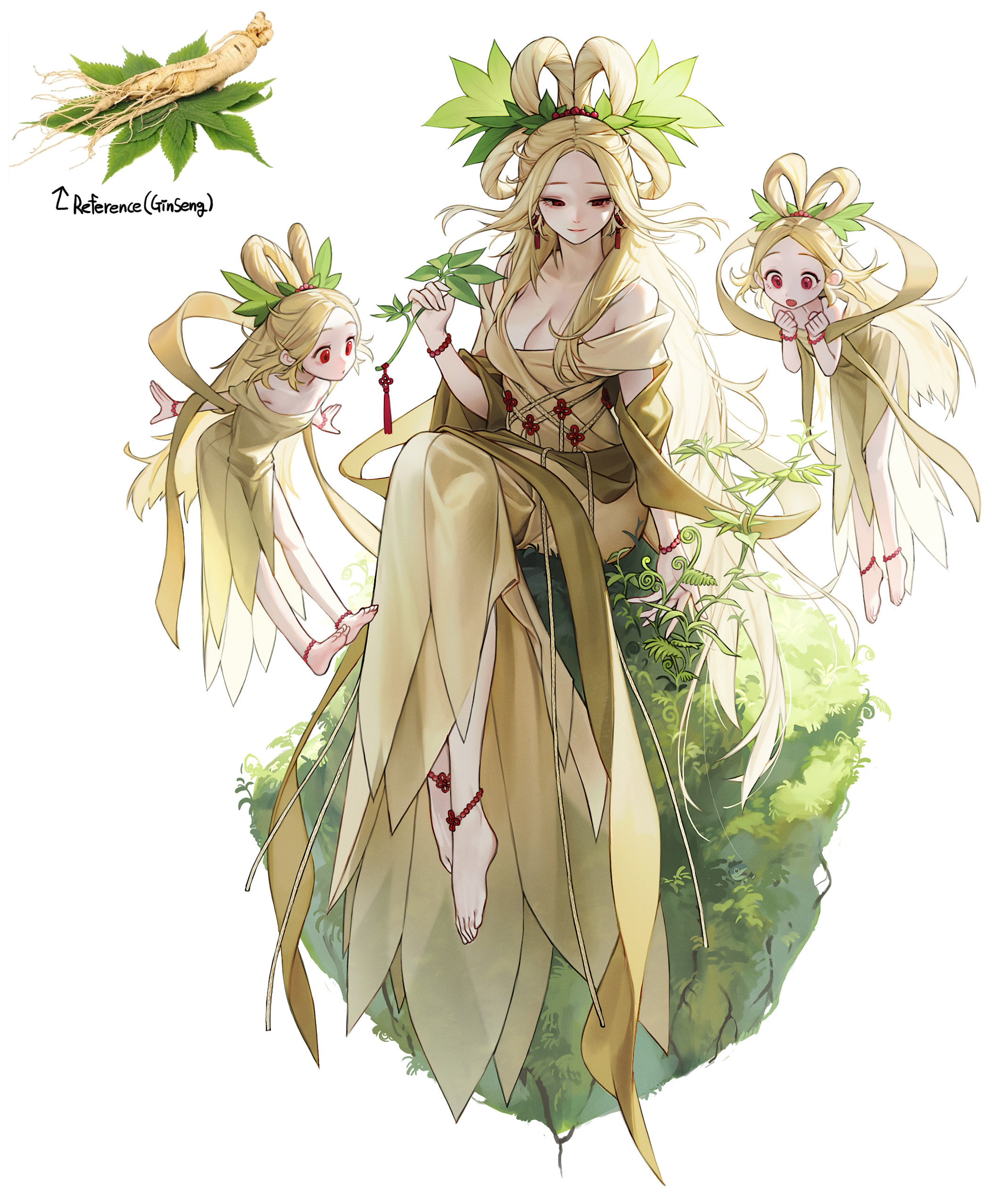

Anthropomorphized everyday objects etc. If it exists, someone has turned it into an anime-girl-or-guy.

- Posts must feature "morphmoe", meaning non-sentient things turned into people.

- No nudity. Lewd art is fine, but mark it NSFW.

- If posting a more suggestive piece, or one with simply a lot of skin, consider still marking it NSFW.

- Include a link to the artist in the post body, if you can.

- AI-generated content is not allowed.

- Positivity only. No shitting on the art, the artists, or the fans of the art/artist.

- Finally, all rules of the parent instance still apply, of course.

SauceNao can be used to reverse search a piece and find its creator, if you do not know who made it.

You may also leave the post body blank or mention @saucechan@ani.social, in which case the bot will attempt to find and provide the source in a comment.

Find other anime communities which may interest you: Here

Other "moe" communities:

- !fitmoe@lemmy.world

- !murdermoe@lemmy.world

- !fangmoe@ani.social

- !cybermoe@ani.social

- !streetmoe@ani.social

- !midriffmoe@ani.social

- !kemonomoe@ani.social

- !officemoe@ani.social

- !meganemoe@ani.social

- !gothmoe@ani.social

- !militarymoe@ani.social

- !smolmoe@ani.social

founded 5 months ago
