Come on, it was right in their name. CrowdStrike. They were threatening us all this time.

We formed a crowd, then BAM, they striked.

We should have seen this coming!!!

Clown strike

I wish my Windows work machine wouldn’t boot. Everything worked fine for us. :-(

Could be worse. I was the only member of my entire team who didn't get stuck in a boot loop, meaning I had to do their work as well as my own... Can't even blame being on Linux as my work computer is Windows 11, I got 'lucky'; I just got a couple of BSODs and the system restarted just fine.

You're a much more honest person than I am. I'd have just claimed mine was BSODing too.

Is there a good ELI5 on what CrowdStrike is, why it is so massively used, why it seems to be so heavily associated with Microsoft, and what the hell happened?

Gonna try my best here:

Crowdstrike is an anti-virus program that everyone in the corporate world uses for their Windows machines. They released an update that made the program fail badly enough that Windows crashes. When Windows crashes like this, it tries to restart in case that fixes the issue, but here it doesn't, and computers get stuck in a loop of restarting.

Because anti-virus programs are there to prevent bad things from happening, you can't just automatically disable the program when it crashes. This means a lot of computers cannot start properly, which means you also cannot tell the computers to fix the problem remotely like you usually would.

The end result is a bunch of low-level techs are spending their weekends manually going to each computer individually and swapping out the bad update file so the computer can boot. It's a massive failure on CrowdStrike's part, and a good reason you shouldn't outsource all your IT like people have been doing.

It's also a strong indicator that companies are not doing enough to protect their own infrastructure. Production servers shouldn't have third party software that auto-updates without going through a test environment. It's one thing to push emergency updates if there is a timely concern or vulnerability, but routine maintenance should go through testing before being promoted to prod.
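To make that policy concrete, here is a minimal Python sketch of the gate being described: routine updates only reach prod after passing a test environment, while emergency fixes may skip the queue. The `Update` class, its fields, and `may_promote_to_prod` are all hypothetical names made up for illustration, not anything from CrowdStrike or a real deployment tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Update:
    """Hypothetical record describing a pending third-party update."""
    name: str
    emergency: bool       # True for a time-critical security fix
    passed_staging: bool  # True once it survived the test environment


def may_promote_to_prod(update: Update) -> bool:
    """Allow emergency fixes through; everything else must pass staging."""
    return update.emergency or update.passed_staging


# Illustrative updates only.
routine = Update("routine-maintenance", emergency=False, passed_staging=False)
hotfix = Update("zero-day-hotfix", emergency=True, passed_staging=False)

print(may_promote_to_prod(routine))
print(may_promote_to_prod(hotfix))
```

Whether emergency updates should really get to bypass staging is exactly the judgment call here; flipping that `or` into an `and` gives the stricter version.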

It's because this got pushed as a virus definition update and not a client update, which bypasses even the customer staging rules that should prevent issues like this. That makes it a little more understandable, because you'd want to be protected against current threats. But yeah, it should still hit testing first if possible.

If a company disguises a software update as a virus definition update, that would be a huge scandal and no serious company should ever work with them again… are you sure that’s what happened?

It wasn't a virus definitions update. It was a driver update. The driver is used to identify and block threats incoming from wifi and wired internet.

The "Outage" section of the Wikipedia article goes into more detail: https://en.wikipedia.org/wiki/2024_CrowdStrike_incident#Outage

Crowdstrike is a cybersecurity company that makes security software for Windows. It apparently operates at the kernel-level, so it's running in the critical path of the OS. So if their software crashes, it takes Windows down with it.

This is very popular software. Many large entities, including Fortune 500 companies, transport authorities, hospitals, etc., use this software.

They pushed a bad update which caused their software to crash, which took Windows down with it on an extremely large number of machines worldwide.

Hilariously bad.

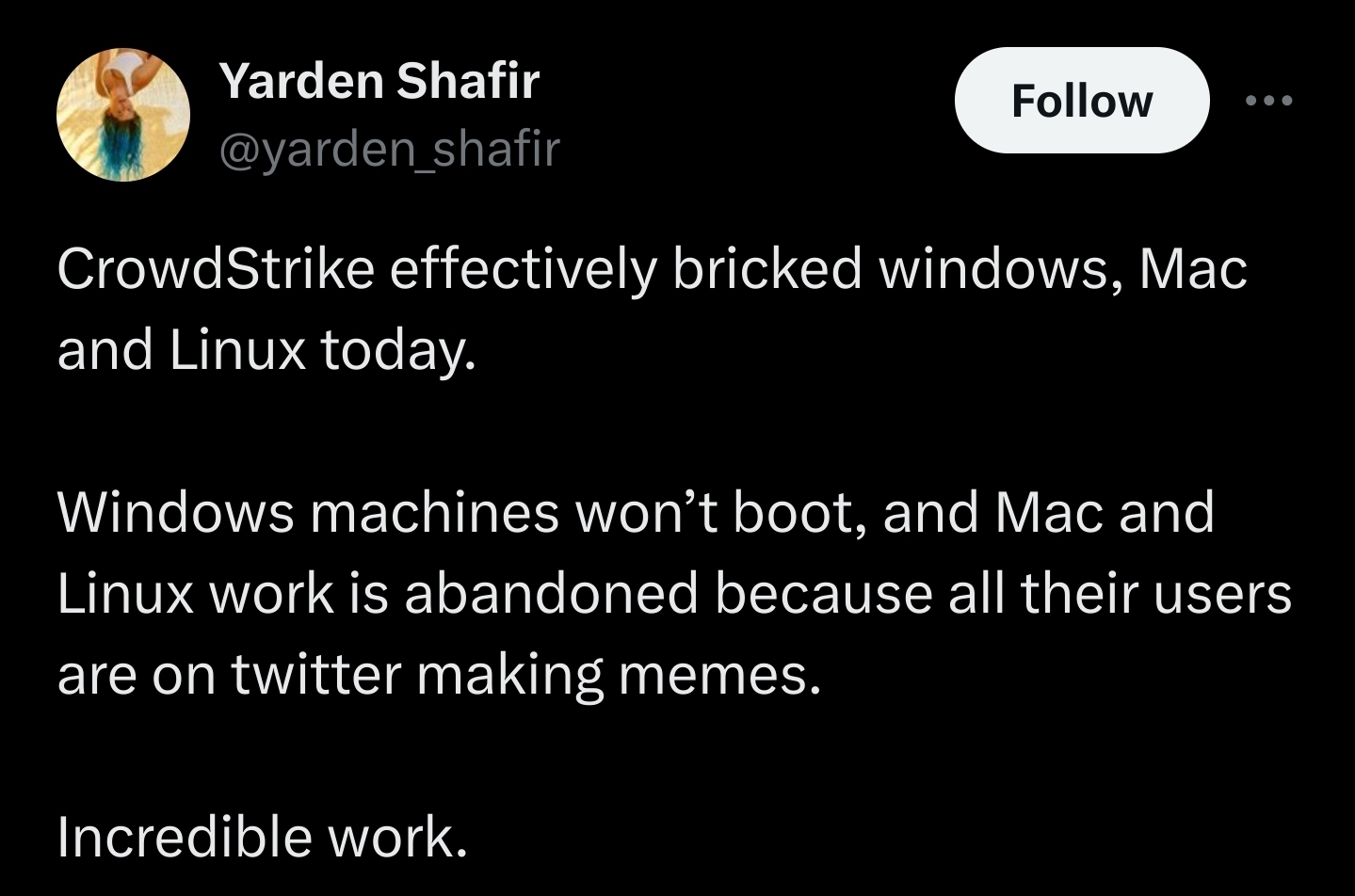

Honestly it is kind of hilarious, with how many people I have had make fun of me for using Linux, and now here I am laughing from my Linux desktop lol

~~cloudstrike~~ crowdstrike should be sued into hell

Crowdstrike*

Cloud Strife*

Counter Stri... no not that.

"the bomb has been planted" - the intern that pushed the update at crowd strike or whatever

Time to rebrand as CloudShrike to prevent future fuckups.

Lol, they only bricked specific machines running their product. Everyone else was fine.

This was a business problem, not a user problem.

As a career QA, I just do not understand how this got through. Do they not use their own software? Do they not have a UAT program?

Heads will roll for this

From what I've read, it sounds like the update file that was causing the problems was entirely filled with zeros; the patched file was the same size but had data in it.

My entirely speculative theory is that the update file that they intended to deploy was okay (and possibly passed internal testing), but when it was being deployed to customers there was some error which caused the file to be written incorrectly (or somehow a blank dummy file was used). Meaning the original update could have been through testing but wasn't what actually ended up being deployed to customers.
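If that theory is right, a last-step integrity check would catch it. A minimal Python sketch, assuming the update is just a blob of bytes and that a SHA-256 digest of the tested file is recorded somewhere; `sha256_hex`, `safe_to_deploy`, and the sample payloads are hypothetical, and this says nothing about what CrowdStrike's pipeline actually does.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the payload as a hex string."""
    return hashlib.sha256(data).hexdigest()


def safe_to_deploy(payload: bytes, tested_digest: str) -> bool:
    """Reject empty or all-zero payloads and anything that differs from
    the file that actually went through testing."""
    if not any(payload):  # empty, or every byte is 0x00
        return False
    return sha256_hex(payload) == tested_digest


# Illustrative payloads only.
tested_file = b"\xaa\xbb\xcc\xdd" + bytes(4092)
blank_file = bytes(len(tested_file))  # same size, nothing but zeros
digest = sha256_hex(tested_file)

print(safe_to_deploy(tested_file, digest))
print(safe_to_deploy(blank_file, digest))
```

The digest comparison alone would already reject the blank file; the explicit zero check is just there to name the failure mode being talked about.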

I also assume that it's very difficult for them to conduct UAT given that a core part of their protection comes from being able to fix possible security issues before they are exploited. If they did extensive UAT prior to deploying updates, it would both slow down the speed with which they can fix possible issues (and therefore allow more time for malicious actors to exploit them), but also provide time for malicious parties to update their attacks in response to the upcoming changes, which may become public knowledge when they are released for UAT.

There's also just an issue of scale; they apparently regularly release several updates like this per day, so I'm not sure how UAT could even be conducted at that pace. Granted, I've only ever been personally involved with UAT for applications that had quarterly (major) updates, so there might be ways to get it done several times a day that I'm not aware of.

None of that is to take away from the fact that this was an enormous cock up, and that whatever processes they have in place are clearly not sufficient. I completely agree that whatever they do for testing these updates has failed in a monumental way. My work was relatively unaffected by this, but I imagine there are lots of angry customers who are rightly demanding answers for how exactly this happened, and how they intend to avoid something like this happening again.

They make software for both of them also though, IMO they're at fault for sure but so should be Microsoft for making a trash operating system.

Not saying Windows isn't trash, but considering what CrowdStrike's software is, they could have bricked Mac or Linux just as hard. The CrowdStrike agent has pretty broad access to modify and block execution of system files. Nuke a few of the wrong files, and any OS is going to grind to a halt.

Probably would have been worse if this was on Linux. That's like 90% of the internet.

Good thing is the kind of people making decisions based on buzzword-bongo filled PR campaigns like Crowdstrike's are already forcing their IT to use Windows anyway.

I'd say the issue isn't that Windows is a trash OS, but everyone using the exact same trash OS and same trash security program.

What's the criteria for a Windows machine to be affected? I use Windows but haven't had any issues today.

This is specifically caused by an update for CrowdStrike's Falcon antivirus software, which is designed for large organizations. This won't affect personal computers unless they've specifically chosen to install Falcon.

be a windows based machine protected by crowdstrike as a security service, and received said botched update

> protected

Um, about that...

I mean, cops exist to protect and serve; whether they actually do that is a different story.

> cops exist to protect and serve

Supreme Court says otherwise. It's just a slogan, not an actual mission statement

Can an OS be bricked?:

A brick (or bricked device) is a mobile device, game console, router, computer or other electronic device that is no longer functional due to corrupted firmware, a hardware problem, or other damage.[1] The term analogizes the device to a brick's modern technological usefulness.[2]

Edit: you may click the tiny down arrow if you think it can't. ;)

🤓

It's just a setup to the punchline, not a legitimate assessment of the situation.

Colloquially, I'd use it to mean "requires physical access to fix."
