well I think it's pretty neat

It even runs on my potato server

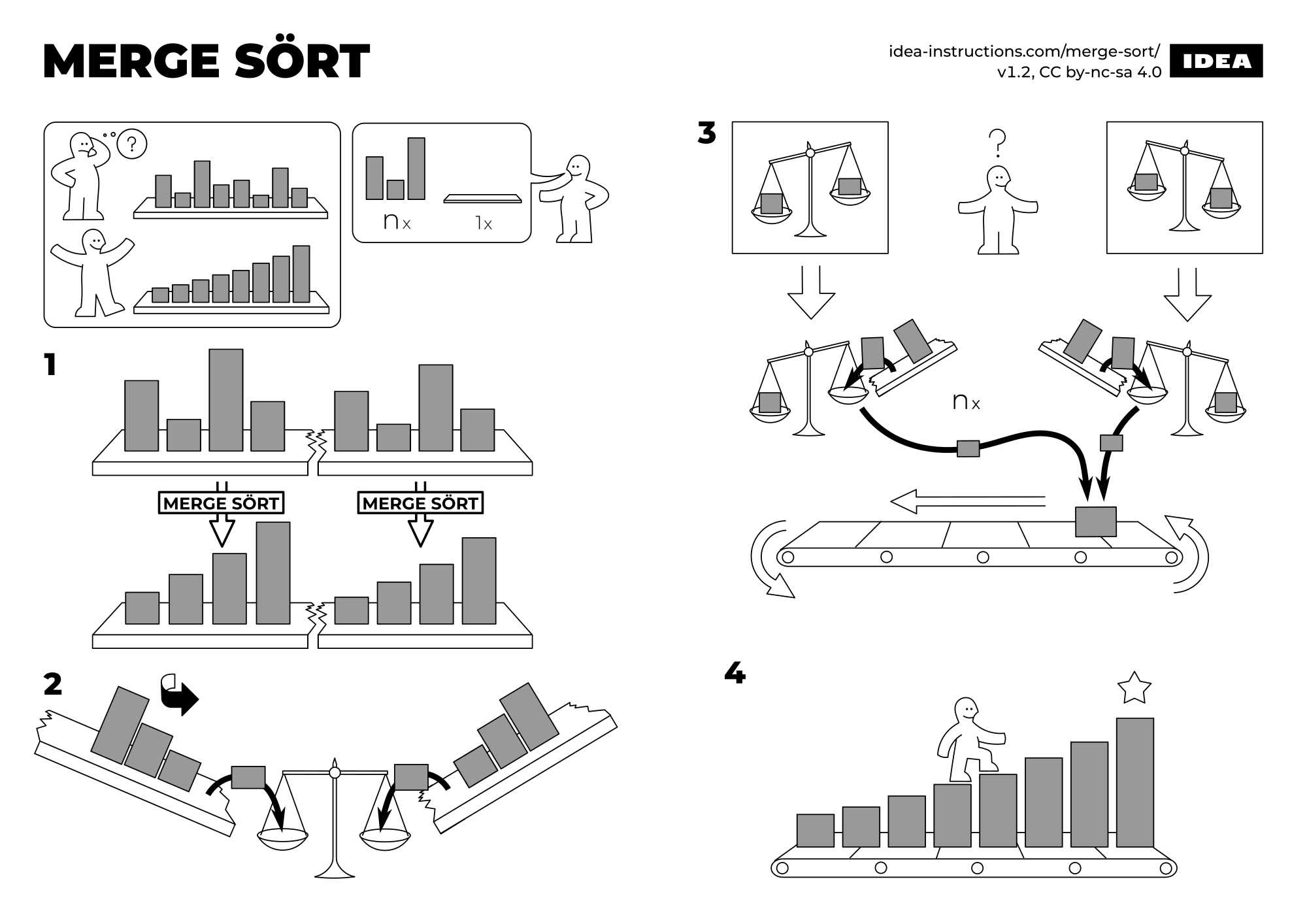

I was with you until the last step. How did it all get sorted, instead of having two "peaks"?

I'm pretty sure it's because the sort comparison is between the elements at the current index of each array, and happens at every step.

Then what is the purpose of the middle steps?

Second to last also has this problem.

The image has big "Draw the rest of owl" energy.

I think the image assumes that the viewer is familiar with merge sort, which is something you will learn in basically every undergraduate CS program, then never use.

To answer your first question, it helps to have something to compare it against. I think the most obvious way of sorting a list would be "selection sort", where you look through the unsorted list, find the smallest element, put that in the sorted list, then repeat for the second smallest element. If the list has N elements, this requires you to loop through it N times. Since every loop involves looking at up to N elements, this means you end up taking about N * N time to sort the list.
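For reference, the "find the smallest, move it over, repeat" approach described above (usually called selection sort) could be sketched in Python roughly like this. The function and variable names are my own, and it builds a new list rather than sorting in place just to keep it readable:

```python
def selection_sort(items):
    """Return a new sorted list by repeatedly pulling out the smallest element."""
    remaining = list(items)  # copy so the caller's list is left untouched
    result = []
    while remaining:  # outer loop: runs once per element, so N times
        # Inner loop: scan everything still unsorted to find the smallest.
        smallest_index = 0
        for i in range(1, len(remaining)):
            if remaining[i] < remaining[smallest_index]:
                smallest_index = i
        result.append(remaining.pop(smallest_index))
    return result


print(selection_sort([5, 2, 9, 1, 5, 6]))  # [1, 2, 5, 5, 6, 9]
```

The nested loops are where the roughly N * N cost comes from.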

With merge sort, the critical observation is that if you have 2 sublists that are already sorted, you know the smallest remaining element is at the start of one of the two input lists, so you can skip the inner loop where you would search for the smallest element. This means that each layer in merge sort takes only about N operations. And since each layer halves the number of lists, you only need about log_2(N) layers, so the entire sort can be done in around N * log(N) time.
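Here is a minimal Python sketch of that idea, with names I made up for illustration (this is the textbook top-down version, not necessarily the exact layout the image draws). The `merge` step is also why the result has no "two peaks": it only ever compares the front elements of the two sorted halves and takes the smaller one.

```python
def merge(left, right):
    """Combine two already-sorted lists into one sorted list."""
    merged = []
    i = j = 0
    # The smallest remaining element is always at the front of one list,
    # so one comparison per step is enough. No searching needed.
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    # One list ran out; whatever is left in the other is already sorted.
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


def merge_sort(items):
    """Split in half, sort each half recursively, then merge the halves."""
    if len(items) <= 1:
        return list(items)
    mid = len(items) // 2
    return merge(merge_sort(items[:mid]), merge_sort(items[mid:]))


print(merge([1, 4, 7], [2, 3, 9]))            # [1, 2, 3, 4, 7, 9]
print(merge_sort([38, 27, 43, 3, 9, 82, 10]))  # [3, 9, 10, 27, 38, 43, 82]
```

Each level of recursion touches every element once in `merge`, and there are about log_2(N) levels.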

Since NlogN is smaller than N^2, this makes merge sort theoretically better.

Note: N^2 and NlogN scaling refer to runtime when considering values of N approaching infinity.

For finite N, it is entirely possible for algorithms with worse scaling behavior to complete faster.

That does make more sense when explained that way, thank you.

Feels like the one who created this didn't want to do step 3 to 5 for all levels.

I take pride in understanding a joke in programminghumor for the first time. (I just started with computer-science)

Oof you picked the wrong field. Honestly, programmer culture is elitist and toxic as fuck, speaking as someone who made that mistake before.

Why do you think I would want to know if I picked the wrong field?

🈹⛴️

-Marge groan-
