The results of this new GSM-Symbolic paper aren't completely new in the world of AI research. Other recent papers have similarly suggested that LLMs don't actually perform formal reasoning and instead mimic it with probabilistic pattern-matching of the closest similar data seen in their vast training sets.

WTF kind of reporting is this, though? None of this is recent or new at all, like in the slightest. I am shit at math, but have a high-level understanding of statistical modeling concepts, mostly as of a decade ago, and even I knew this. I recall a stats PhD describing models as "stochastic parrots"; nothing more than probabilistic mimicry. It was obviously no different the instant LLMs came on the scene. If only tech journalists bothered to do a superficial amount of research, instead of being spoon-fed spin from tech bros with a profit motive...

It's written as if they literally expected AI to be self-reasoning and not just a mirror of the bullshit that is put into it.

Probably because that's the common expectation due to calling it "AI". We're well past the point of putting the lid back on that can of worms, but we really should have saved that label for... y'know... intelligence, that's artificial. People think we've made an early version of Halo's Cortana or Star Trek's Data, and not just a spellchecker on steroids.

The day we make actual AI is going to be a really confusing one for humanity.

…a spellchecker on steroids.

Ask literally any of the LLM chat bots out there still using any headless GPT instances from 2023 how many Rs there are in “strawberry,” and enjoy. 🍓

This problem is due to the fact that the AI isn't using English words internally; it's tokenizing. There are no Rs in {35006}.
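To make the tokenizing point concrete, here's a rough sketch. The vocabulary and the IDs are completely made up for illustration (they are not from any real tokenizer), and the greedy longest-match splitting is just an assumption to keep it short. The point is only that the model-side view is a list of integers, and the letters only come back once you decode.

```python
# Toy illustration only: this vocabulary and these IDs are hypothetical,
# not taken from any real tokenizer.
TOY_VOCAB = {"straw": 101, "berry": 202}
ID_TO_PIECE = {token_id: piece for piece, token_id in TOY_VOCAB.items()}


def toy_encode(word: str) -> list[int]:
    """Greedily split a word into the longest matching vocabulary pieces."""
    ids = []
    pos = 0
    while pos < len(word):
        # Try the longest candidate piece first, then shrink it.
        for end in range(len(word), pos, -1):
            piece = word[pos:end]
            if piece in TOY_VOCAB:
                ids.append(TOY_VOCAB[piece])
                pos = end
                break
        else:
            raise ValueError(f"no vocabulary piece matches at position {pos}")
    return ids


def toy_decode(ids: list[int]) -> str:
    """Map IDs back to their text pieces and join them."""
    return "".join(ID_TO_PIECE[i] for i in ids)


token_ids = toy_encode("strawberry")          # what the model "sees"
r_count = toy_decode(token_ids).count("r")    # letters exist only after decoding
print(token_ids, r_count)
```

Counting letters is trivial on the decoded string, but nothing in the integer list itself says how many Rs it stands for.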

describing models as “stochastic parrots”

That is SUCH a good description.

Clearly this sort of reporting is not prevalent enough, given how many people think we have actually come up with something new these last few years and aren't just throwing shitloads of graphics cards and data at statistical models.

*starts sweating

Look at that subtle pixel count, the tasteful colouring... oh my god, it's even transparent...

One time I exposed deep cracks in my calculator's ability to write words with upside down numbers. I only ever managed to write BOOBS and hELLhOLE.

LLMs aren't reasoning. They can do some stuff okay, but they aren't thinking. Maybe if you had hundreds of them with unique training data all voting on proposals you could get something along the lines of a kind of recognition, but at that point you might as well just simulate cortical columns and try to do Jeff Hawkins' idea.

LLMs aren't reasoning. They can do some stuff okay, but they aren't thinking

And the more people realize it, the better. Which is why it's good that research like that from a reputable company makes headlines.

Did anyone believe they had the ability to reason?

People are stupid, OK? I've had people who think that it can in fact do math "better than a calculator".

Like 90% of the consumers using this tech are totally fine handing over tasks that require reasoning to LLMs and not checking the answers for accuracy.

I still believe they have the ability to reason in a very limited capacity. Everyone says that they're just very sophisticated parrots, but there is something emergent going on. These AIs need to have a world-model inside of themselves to be able to parrot things as correctly as they currently do (yes, including the hallucinations and the incorrect answers). Sure, they are using tokens instead of real dictionary words, which comes with things like the strawberry problem, but just because they are not nearly as sophisticated as us doesn't mean there is no reasoning happening.

We are not special.

It's an illusion. People think that because the language model puts words into sequences like we do, there must be something there. But we know for a fact that it is just word associations. It is fundamentally just predicting the most likely next word and generating it.
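If "predicting the most likely next word" sounds abstract, here's a deliberately tiny sketch of the idea. Big caveat: this is a word-pair (bigram) counter over a made-up corpus, not how an actual LLM is built. Real models use neural networks over tokens and usually sample instead of always taking the top choice. All the names here are just for illustration.

```python
from collections import Counter, defaultdict

# Made-up miniature "training set"; purely illustrative.
corpus = "the cat sat on the mat . the cat sat on the rug .".split()

# Count which word follows which.
follow_counts = defaultdict(Counter)
for current, following in zip(corpus, corpus[1:]):
    follow_counts[current][following] += 1


def predict_next(word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = follow_counts.get(word)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(start, max_words=4):
    """Produce text strictly forwards, one most-likely word at a time."""
    words = [start]
    while len(words) < max_words:
        following = predict_next(words[-1])
        if following is None:
            break
        words.append(following)
    return words


print(" ".join(generate("on")))
```

The output looks like language because the counts came from language, not because anything in there checks whether the sentence is true.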

If it helps, we have something akin to an LLM inside our brain, and it does the same limited task. Our brains have distinct centres that do all sorts of recognition and generative tasks, including images, sounds and language. We've made neural networks that do these tasks too, but the difference is that we have a unifying structure that we call "consciousness" that is able to grasp context, and is able to loop back the different centres into one another to achieve all sorts of varied results.

So we get our internal LLM to sequence words, one word after another, then we loop back those words via the language recognition centre into the context engine, so it can check if the words match the message it intended to create, it checks them against its internal model of the world. If there's a mismatch, it might ask for different words till it sees the message it wanted to see. This can all be done very fast, and we're barely aware of it. Or, if it's feeling lazy today, it might just blurt out the first sentence that sprang to mind and it won't make sense, and we might call that a brain fart.

Back in the 80s "automatic writing" took off, which was essentially people tapping into this internal LLM and just letting the words flow out without editing. It was nonsense, but it had this uncanny resemblance to human language, and people thought they were contacting ghosts, because obviously there has to be something there, right? But it's not, it's just that it sounds like people.

These LLMs only produce text forwards; they have no ability to create a sentence, then examine that sentence and see if it matches some internal model of the world. They have no capacity for context. That's why any question involving A inside B trips them up, because that is fundamentally a question about context. 'How many Ws are in the sentence "Howard likes strawberries"?' is a question about context, and that's why they screw it up.

I don't think you solve that without creating a real intelligence, because a context engine would necessarily be able to expand its own context arbitrarily. I think allowing an LLM to read its own words back and do some sort of check for fidelity might be one way to bootstrap a context engine into existence, because that check would require it to begin to build an internal model of the world. I suspect the processing power and insights required for that are beyond us for now.

If the only thing you feed an AI is words, then how would it possibly understand what these words mean if it does not have access to the things the words are referring to?

If it does not know the meaning of words, then what can it do but find patterns in the ways they are used?

This is a shitpost.

We are special, I am in any case.

So do I every time I ask it a slightly complicated programming question

And sometimes even really simple ones.

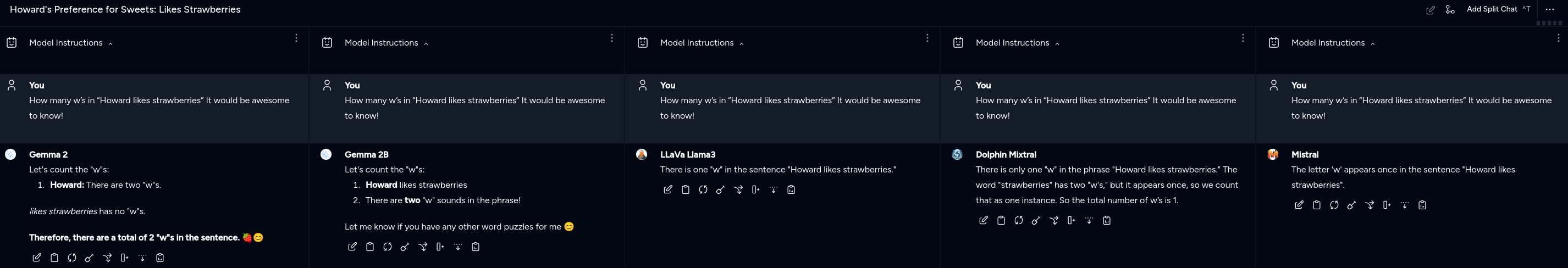

How many w's in "Howard likes strawberries" It would be awesome to know!

So I keep seeing people reference this... And I found it curious that LLMs have problems with this. So I asked them... Several of them...

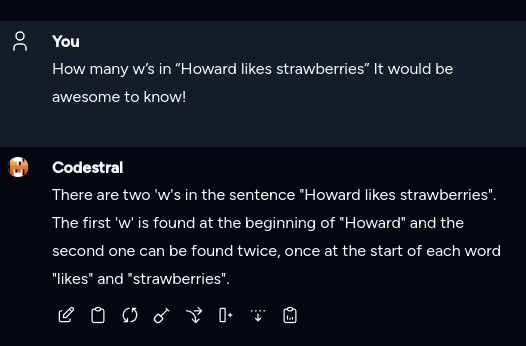

Outside of this image... Codestral (my default) actually got it correct and didn't talk itself out of being correct... But that's no fun, so I asked 5 others, at once.

What's sad is that Dolphin Mixtral is a 26.44GB model...

Gemma 2 is the 5.44GB variant

Gemma 2B is the 1.63GB variant

LLaVa Llama3 is the 5.55GB variant

Mistral is the 4.11GB variant

So I asked Codestral again because why not! And this time it talked itself out of being correct...

Edit: fixed newline formatting.

The tested LLMs fared much worse, though, when the Apple researchers modified the GSM-Symbolic benchmark by adding "seemingly relevant but ultimately inconsequential statements" to the questions
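For anyone wondering what that kind of modification looks like mechanically, here's a rough sketch. To be clear, the template, the names, and the distractor sentence below are hypothetical, not taken from the paper's actual dataset. It just shows the general shape: same arithmetic, same correct answer, plus one clause that sounds relevant but changes nothing.

```python
import random

# Hypothetical question template; not from the real benchmark.
TEMPLATE = (
    "{name} picks {a} apples on Friday and {b} apples on Saturday. "
    "{distractor}How many apples does {name} have?"
)
# A "seemingly relevant but ultimately inconsequential" clause.
DISTRACTOR = "{c} of the apples are a bit smaller than average. "


def make_question(rng, with_distractor):
    """Build one question variant and its ground-truth answer."""
    # Draw every value in the same order regardless of the flag, so the
    # same seed gives the same name and numbers for both variants.
    name = rng.choice(["Ava", "Liam", "Noor"])
    a = rng.randint(10, 50)
    b = rng.randint(10, 50)
    c = rng.randint(1, 9)
    distractor = DISTRACTOR.format(c=c) if with_distractor else ""
    text = TEMPLATE.format(name=name, a=a, b=b, distractor=distractor)
    return text, a + b  # the size remark never affects the total


plain_text, plain_answer = make_question(random.Random(0), with_distractor=False)
noisy_text, noisy_answer = make_question(random.Random(0), with_distractor=True)
print(plain_text)
print(noisy_text)
```

A solver that actually reasons should give the same answer to both variants; one that pattern-matches on surface text may try to subtract the "smaller" apples.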

Good thing they're being trained on random posts and comments on the internet, which are known for being succinct and accurate.

Yeah, especially given that so many popular vegetables are members of the brassica genus

statistical engine suggesting words that sound like they'd probably be correct is bad at reasoning

How can this be??

I would say that if anything, LLMs are showing cracks in our way of reasoning.

Or the problem with tech billionaires selling "magic solutions" to problems that don't actually exist. Or how people are too gullible in the modern internet to understand when they're being sold snake oil in the form of "technological advancement" when it's actually just repackaged plagiarized material.

They are large LANGUAGE models. It's no surprise that they can't solve those mathematical problems in the study. They are trained for text production. We already knew that they were no good in counting things.

Are you telling me Apple hasn't seen through the grift and is approaching this with an open mind just to learn how full of bullshit most of the claims from the likes of Altman are? And now they're sharing their gruesome discoveries with everyone while they're unveiling them?

I would argue that Apple Intelligence™️ is evidence they never bought the grift. It's very focused on tailored models scoped to the specific tasks that AI does well; creative and non-critical tasks like assisting with text processing/transforming, image generation, photo manipulation.

The Siri integrations seem more like they're using the LLM to stitch together the APIs that were already exposed between apps (used by Shortcuts, etc.), each having internal logic and validation that's entirely programmed (and documented) by humans. They market it as a whole lot more, but they market every new product as some significant milestone for mankind... even when it's a feature that other phones have had for years, but in an iPhone!

The fun part isn't even what Apple said (that the emperor is naked) but why it's doing it. It's a nice bullet against all four of its GAFAM competitors.

This right here. This isn't conscientious analysis of tech and intellectual honesty or whatever; it's a calculated shot at its competitors, who are desperately trying to prevent the generative AI market house of cards from falling.

They're a publicly traded company.

Their executives need something to point to to be able to push back against pressure to jump on the trend.

cracks? it doesn't even exist. we figured this out a long time ago.

I feel like a draft landed on Tim's desk a few weeks ago, explains why they suddenly pulled back on OpenAI funding.

People on the removed superfund birdsite are already saying Apple is missing out on the next revolution.

I hope this gets circulated enough to reduce the ridiculous amount of investment and energy waste that the ramping-up of "AI" services has brought. All the companies have just gone way too far off the deep end with this shit that most people don't even want.

People working with these technologies have known this for quite a while. It's nice of Apple's researchers to formalize it, but nobody is really surprised, least of all the companies funnelling traincars of money into the LLM furnace.

They predict, not reason....

Here's the cycle we've gone through multiple times and are currently in:

AI winter (low research funding) -> incremental scientific advancement -> breakthrough for new capabilities from multiple incremental advancements to the scientific models over time building on each other (expert systems, LLMs, neural networks, etc.) -> engineering creates new tech products/frameworks/services based on new science -> hype for new tech creates sales and economic activity, research funding, subsidies, etc. -> (for LLMs we're here) people become familiar with new tech capabilities and limitations through use -> hype spending bubble bursts when overspend doesn't keep up with infinite money line goes up or new research breakthroughs -> AI winter -> etc...

Are we not flawed too? Does that not make AI... human?

How dare you imply that humans just make shit up when they don't know the truth

Real headline: Apple research presents possible improvements in benchmarking LLMs.

Not even close. The paper is questioning LLMs' ability to reason. The article talks about fundamental flaws of LLMs and how we might need different approaches to achieve reasoning. The benchmark is only used to prove the point. It is definitely not the headline.
